Model and Learning Process Concepts

The IoT Learning Agent is a service that orchestrates learning and uses Learning Models. The agent is built to load any iterative or micro-batching model. The agent itself does not provide an extensive library of implemented models; instead, it provides tools to add new ones.

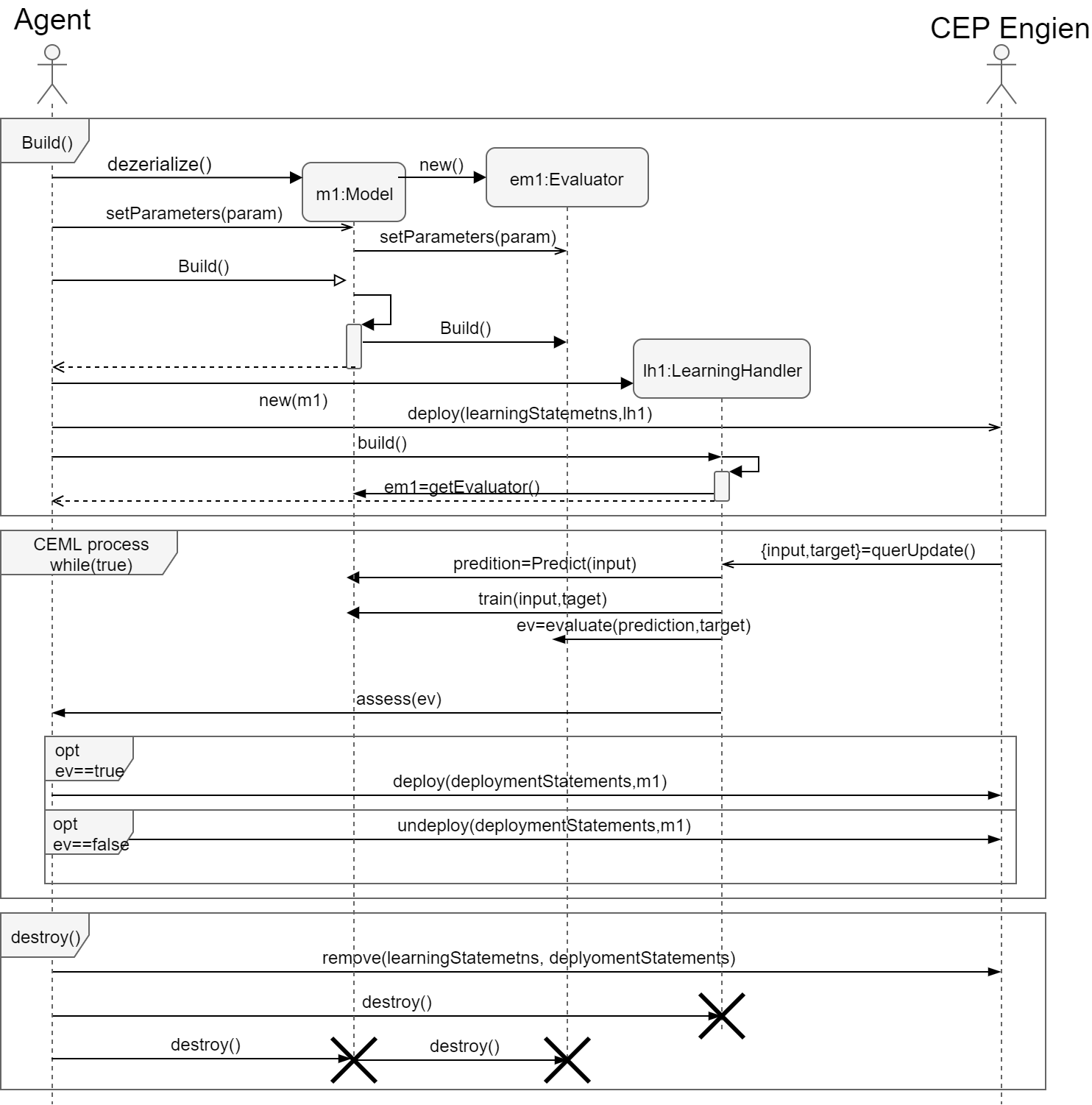

In the IoT Learning Agent, models are trained continuously and without a time bound. It is therefore essential to understand the lifecycle of the Learning Models. A model has four processes: two run at the moment they are invoked, and two are continuous states. The Build process happens when a model is created from scratch or loaded from a serialized version of the model. The Destroy process happens when the user indicates that the model should be destroyed. The other two processes are the CEML processes. Continuous training happens every time a new datapoint of the feature space is collected by the learning streams and used to train, evaluate, and assess the model. Finally, continuous deployment is active as long as the assessment in the continuous training phase returns true. Below are a schematic view of the Model Lifecycle and, afterward, a sequence diagram of the Build, Learning, and Destroy processes.

Model Lifecycle Schematic

Learning processes (build, learning, destroy) in the agent
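
The lifecycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `RunningMeanModel`, `Lifecycle`, `on_datapoint`, and `max_error` are hypothetical and are not part of the agent, and the choice to evaluate each datapoint before training on it is an assumption made for this sketch.

```python
class RunningMeanModel:
    """Toy iterative model (hypothetical): predicts the mean of the targets seen so far."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def learn(self, target):
        # Incremental mean update, one datapoint at a time.
        self.count += 1
        self.mean += (target - self.mean) / self.count

    def predict(self):
        return self.mean


class Lifecycle:
    """Hypothetical wrapper showing Build, continuous training/deployment, and Destroy."""

    def __init__(self, max_error):
        self.max_error = max_error  # assumed assessment threshold
        self.model = None
        self.deployed = False

    def build(self):
        # Build: create the model from scratch.
        self.model = RunningMeanModel()

    def on_datapoint(self, target):
        # Continuous training: evaluate, train, then assess on every new datapoint.
        error = abs(self.model.predict() - target)  # evaluate before training
        self.model.learn(target)                    # train
        self.deployed = error <= self.max_error     # assess: gates deployment
        return self.deployed

    def destroy(self):
        # Destroy: release the model on user request.
        self.model = None
        self.deployed = False
```

In this sketch, deployment is not a separate step: it is simply active for as long as the latest assessment returns true, and it switches off again when a datapoint fails the assessment.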

The IoT Learning Agent provides a set of interfaces, classes, and technologies to integrate any external model. Currently, there are two ways to integrate a model into the IoT Learning framework. The first is to implement one of the native classes or interfaces of the Learning Agent (see Java Model Integration below). The second is to provide a Pyro backend model using the Pyro Model Integrator of the agent (see Python Model Integration below).

The learning process works through an interface named Model. If this interface is completely and correctly implemented, the IoT Learning Agent can load and use it. The agent needs the implementation of the Model interface on the classpath before the model is first loaded. Loading takes place when the model name is mentioned in the Model name part of the CEML request (see CEML Request API (aka CEML API)). The name should either be the canonical name of the class, or the implementing class should be located in the 'eu.linksmart.services.event.ceml.models' package.

The Model interface has all the functions needed by the agent, some of which are only understandable through the internal logic of the agent. Therefore, there is the abstract class ModelInstance, which should ease the process of implementing new models. Moreover, ModelInstance is a general class for all learning types, such as supervised and unsupervised learning, or classification, regression, and clustering. Therefore, there are three additional classes that make implementing a model a bit easier: ClassicationModel, RegressionModel, and ClusteringModel.

When using ModelInstance, ClassicationModel, RegressionModel, or ClusteringModel, you need to implement the functions void learn(Input input, Input targetLabel) and Prediction predict(Input input). In the case of batch learning, these two functions are called one by one for each element; if this is not the desired behavior, the functions void batchLearn(List input, List targetLabel) and List<Prediction> batchPredict(List input) should be overridden. If ModelInstance, ClassicationModel, RegressionModel, or ClusteringModel is not used, then the corresponding isClassifier, isRegressor, or isClusterer function should be implemented. One example of a learning model is Auto-Regressive Neural Networks.
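
The relationship between the single-item and batch functions can be illustrated with a small Python mirror of that contract. This is not the agent's Java API: the classes `ModelSketch`, `MajorityLabelModel`, and `BulkMajorityLabelModel` are hypothetical, and only the function names learn, predict, batchLearn, and batchPredict are taken from the text above.

```python
from collections import Counter


class ModelSketch:
    """Hypothetical Python mirror of the learn/predict contract (illustration only)."""

    def learn(self, x, targetLabel):
        raise NotImplementedError

    def predict(self, x):
        raise NotImplementedError

    def batchLearn(self, inputs, targetLabels):
        # Default batch behaviour: fall back to one-by-one training.
        for x, y in zip(inputs, targetLabels):
            self.learn(x, y)

    def batchPredict(self, inputs):
        # Default batch behaviour: fall back to one-by-one prediction.
        return [self.predict(x) for x in inputs]


class MajorityLabelModel(ModelSketch):
    """Toy classifier: always predicts the most frequent label seen so far."""

    def __init__(self):
        self.counts = Counter()

    def learn(self, x, targetLabel):
        self.counts[targetLabel] += 1

    def predict(self, x):
        return self.counts.most_common(1)[0][0] if self.counts else None


class BulkMajorityLabelModel(MajorityLabelModel):
    """Same model, but overriding batchLearn to process the whole batch at once."""

    def batchLearn(self, inputs, targetLabels):
        self.counts.update(targetLabels)
```

A model that only defines learn and predict still works with batches through the defaults; overriding the batch functions is only needed when the one-by-one fallback is not the desired behavior.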

Integrating external models written in Python is as easy as for Java, but it is a bit more complicated to understand and run. A link to the explanation is given below.

Loading models developed in Python is fundamentally different from loading them natively in Java. When the agent loads a Java model, a class is loaded at runtime and then instantiated inside the Java and agent execution environment. However, the agent cannot execute Python code natively, so a different approach is needed. While running the model as a script would be possible, it is highly inefficient. Instead, the agent needs a method to keep the Python object 'alive', and to do so it uses Pyro. Pyro (Python Remote Objects) is a network-based inter-process communication library from the Python community. Pyro constructs a network interface using TCP and binary protocols. With this interface, the agent can execute Python code remotely in an efficient manner.
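
A minimal sketch of keeping a Python object alive behind a Pyro daemon is shown below. It assumes the Pyro4 package (the text above does not state which Pyro version the agent targets), and the class `StatefulModel` and function `serve` are hypothetical names chosen for this example.

```python
class StatefulModel:
    """Keeps its state in memory between calls, which a one-shot script could not do."""

    def __init__(self):
        self.seen = 0

    def learn(self, value):
        # Each remote call updates the same long-lived object.
        self.seen += 1
        return self.seen


def serve(host="localhost", port=0):
    """Expose one StatefulModel instance over TCP until the process is stopped."""
    import Pyro4  # third-party package; Pyro4 is an assumption of this sketch

    daemon = Pyro4.Daemon(host=host, port=port)  # port=0 lets the OS pick a free port
    uri = daemon.register(Pyro4.expose(StatefulModel)())
    print("Model available at", uri)
    daemon.requestLoop()  # blocks; the object stays alive between remote calls
```

Calling `serve()` in a separate process prints a URI that a Pyro client can connect to; the model instance then survives across calls instead of being recreated for each request.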

To implement a Python model, a model backend must be provided that implements the functions of the PythonAdapter. The functions are almost the same as the ones explained in Model and Learning Process Concepts, except for importModel and exportModel. importModel is called when a serialized model is provided in the request; this serialized model should be loaded when build() is called. exportModel is called when a serialized version of the model is requested as a string. The build function has different parameters from the original build function in Java, but the functionality stays the same: in Java, the parameters are set before build is called, while in Python they are passed during the call.
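
The interplay of importModel, build, and exportModel can be sketched as follows. The signatures here are assumptions, since the exact PythonAdapter signatures are not given in this text; the class `MeanModelBackend`, the `parameters` argument, and the JSON serialization format are hypothetical choices made for this example.

```python
import json


class MeanModelBackend:
    """Hypothetical backend; the real PythonAdapter signatures may differ."""

    def __init__(self):
        self.state = None
        self.pending = None  # serialized model waiting for build()

    def importModel(self, serialized):
        # Only store the string here; it is loaded when build() is called.
        self.pending = serialized

    def build(self, parameters=None):
        # In Python the parameters arrive with the call itself.
        if self.pending is not None:
            self.state = json.loads(self.pending)
            self.pending = None
        else:
            self.state = {"count": 0, "mean": 0.0, "parameters": parameters or {}}

    def learn(self, target):
        # Incremental mean update on the in-memory state.
        s = self.state
        s["count"] += 1
        s["mean"] += (target - s["mean"]) / s["count"]

    def predict(self):
        return self.state["mean"]

    def exportModel(self):
        # Return the serialized model as a string.
        return json.dumps(self.state)
```

Exporting from one backend and importing into a fresh one before calling build() restores the trained state, which is the round trip the two functions are meant to support.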

Using a Python model is fundamentally different from using a Java one, i.e., the agent has another backend subprocess running, and that process must be managed too. There are four ways to manage it.

1. The Pyro object is executed, managed, and only accessible by the agent. The Python process runs the model as a subprocess of the agent, in the same container or environment where the agent is placed. This requires the agent to have the administrative rights to execute and access Python; otherwise, it fails. This method is simple, but the Python process is entirely managed by the agent.

2. Alternatively, the Pyro object can run independently and register with a name server. To do so, first start a name server (see Pyro Name Server) or a container of it (see Pyro-ns image). Afterward, the name can be given in the request to the agent, which locates the object using the name server. An example is in pyro-boilerplate.

3. A Pyro REST API endpoint can be provided to the agent in the request, so that the agent has access to an external execution environment in which to spawn new Pyro objects. The implementations (in Java and Python) of this service can be found in PythonManager.

4. Same as the previous option, except that the service ID is given instead of the REST endpoint. The agent then uses the Service Catalog to look up the service endpoint. This option only works if a Service Catalog is available.
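
The name server option (an independently running Pyro object that registers under a name) can be sketched as below. This assumes Pyro4 and an already running name server (with Pyro4, for example, started via `python -m Pyro4.naming`). The name `example.model`, the class `EchoModel`, and both functions are hypothetical; this is not the pyro-boilerplate code.

```python
MODEL_NAME = "example.model"  # hypothetical name that would be given in the request


class EchoModel:
    """Placeholder model so the sketch stays self-contained."""

    def predict(self, value):
        return value


def register_and_serve(name=MODEL_NAME):
    """Backend side: register the object with the name server and serve it."""
    import Pyro4  # third-party package; Pyro4 is an assumption of this sketch

    daemon = Pyro4.Daemon()
    uri = daemon.register(Pyro4.expose(EchoModel)())
    Pyro4.locateNS().register(name, uri)  # requires a running name server
    daemon.requestLoop()  # blocks while the object is being served


def lookup_and_call(value, name=MODEL_NAME):
    """Client side: resolve the name through the name server, then call the object."""
    import Pyro4

    uri = Pyro4.locateNS().lookup(name)
    with Pyro4.Proxy(uri) as model:
        return model.predict(value)
```

Here `lookup_and_call` plays the role of the agent: it only needs the registered name, while the name server supplies the actual address of the Python process.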

To get started, a boilerplate was developed using the lookup method:

Originally written by José Ángel Carvajal Soto.