kubeadm init waiting for the control plane to become ready on CentOS 7.2 with kubeadm 1.6.1 #228

Comments

|

I'm having the same issue. I also tried removing the Network ARGS as suggested on another issue. It still hangs at |

|

Did you reload the daemon and restart the kubelet service after making the changes? It worked for me after changing the driver and commenting out the network args. The control plane takes 10 to 11 minutes to become ready the first time for me, so I would suggest leaving it for 15 minutes on the first run. |

|

I did reload the daemon and restart the kubelet service every single time. I even left the setup undisturbed for an entire night, but it was still waiting for the control plane. |

|

I've reloaded daemon ( Apiserver and etcd docker containers fail to run after commenting out the networking options. I also tried installing weave-net manually so the cni config directory would be populated, but that didn't work either. To do this I installed weave, ran |

|

Looks like kubelet cannot reach kube api server. |

|

I noticed that etcd wasn't able to listen on port 2380. I followed these steps again and my cluster started up:

If you want to get rid of managing weave by hand...

Kubeadm join should work on other servers. |

|

@Yengas Can you please provide more details on the weave steps? Did you run them on all nodes, or just the master? |

|

@jruels just the master node. Weave is just a single binary. The setup command without any args, downloads weave docker images and creates the CNI config. The launch command without any args starts the weave containers on host only. |

|

@Yengas I'm still not sure what you mean by "get and install weave. Don't run it". I obviously cannot do kubectl apply -f https://git.io/weave-kube-1.6, so how do I install weave? |

|

What do the apiserver logs say? |

|

@rushabh268 |

|

you don't need to do that. kubectl apply -f https://git.io/weave-kube-1.6 should be sufficient. |

|

The API server logs say exactly the same as what I mentioned in the bug. Also, I cannot run kubectl because Kubernetes is not installed |

|

@jruels I'll try it out and update this thread! |

|

In the bug description there are kubeadm logs and kubelet logs. There are no apiserver logs. |

|

@mikedanese How do I get the apiserver logs? |

I'm having the same issue reported here.
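On the question of how to get the apiserver logs: with kubeadm 1.6 and Docker, the API server runs as a container on the master, so its logs can be read with `docker logs`. The sketch below is a hedged illustration, not an official procedure. The helper names are hypothetical, and the `k8s_kube-apiserver` name prefix is an assumption about how the kubelet names its containers; check `docker ps -a` on your host if nothing matches.

```shell
#!/bin/sh
# Hypothetical helpers, not part of kubeadm or Docker.

# Print the ID of the most recently created kube-apiserver container, if any.
# Assumption: the kubelet names the container with a "k8s_kube-apiserver" prefix.
apiserver_container_id() {
  docker ps -a --filter "name=k8s_kube-apiserver" --format '{{.ID}}' | head -n 1
}

# Print the last N lines (default 100) of the API server container's logs.
# Returns 1 when no matching container exists, for example because it never started.
apiserver_logs() {
  id=$(apiserver_container_id)
  if [ -z "$id" ]; then
    echo "no kube-apiserver container found" >&2
    return 1
  fi
  docker logs --tail "${1:-100}" "$id"
}
```

Running `apiserver_logs 200` on the master prints the last 200 lines. `docker ps -a` is used rather than `docker ps` so that a container that has already exited is still found.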

|

|

@acloudiator I think you need to set cgroup-driver in kubeadm config. And then restart the kubelet service |
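A minimal sketch of checking for the cgroup driver mismatch suggested above. It assumes the kubelet drop-in is at /etc/systemd/system/kubelet.service.d/10-kubeadm.conf (verify the path on your host) and that `docker info` prints a `Cgroup Driver:` line. The function names are hypothetical helpers, not part of kubeadm.

```shell
#!/bin/sh
# Hypothetical helpers, not part of kubeadm.

# Print the --cgroup-driver value found in a kubelet drop-in file.
# Prints nothing if the flag is absent.
kubelet_cgroup_driver() {
  sed -n 's/.*--cgroup-driver=\([A-Za-z]*\).*/\1/p' "$1" | head -n 1
}

# Print the cgroup driver reported by the local Docker daemon.
docker_cgroup_driver() {
  docker info 2>/dev/null | sed -n 's/^ *Cgroup Driver: *//p'
}

# Return 0 when the kubelet flag is set and equals Docker's driver, 1 otherwise.
cgroup_drivers_match() {
  kubelet_driver=$(kubelet_cgroup_driver "$1")
  docker_driver=$(docker_cgroup_driver)
  [ -n "$kubelet_driver" ] && [ "$kubelet_driver" = "$docker_driver" ]
}
```

For example, `cgroup_drivers_match /etc/systemd/system/kubelet.service.d/10-kubeadm.conf || echo "cgroup driver mismatch"`. After changing the flag, reload the daemon and restart the kubelet (`systemctl daemon-reload && systemctl restart kubelet`), as discussed earlier in the thread.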

|

It would be great if kubeadm could in some way deal with the cgroup configuration issue.

|

|

Just an update, whatever workaround I tried didn't work. So I moved to CentOS 7.3 for the master and it works like a charm! I kept the minions on CentOS 7.2 though. |

|

@rushabh268 hi, I have the same issue on Redhat Linux 7.2. After updating the systemd, this problem is solved. You can try to update systemd before installation. |

|

I hit this issue on CentOS 7.3. The problem was gone after I uninstalled docker-ce and then installed docker-io. |

|

@ZongqiangZhang I have docker 1.12.6 installed on my nodes. @juntaoXie I tried updating systemd as well and it's still stuck |

|

So I've been running Centos 7.3 w/1.6.4 without issue on a number of machines. Did you make certain you disabled selinux? |

|

@timothysc I have CentOS 7.2 and not CentOS 7.3 and selinux is disabled |

|

@albpal I had exactly the same issue a week ago: In my case the wrong IP address was referring to another KVM on the same physical server. Can be something to do with DNS on a physical machine, which may require a workaround inside kube api or kube dns, otherwise starting a cluster becomes a huge pain for many newcomers! I've wasted a few evenings before noticing What can cause this very odd mismatch in IP addresses? |

|

@albpal Please open a new issue, what you described and what this issue is about are separate issues (I think, based on that info) |

|

@kachkaev I have just checked what you suggested. I found that the wrong IP ends at a CPANEL: vps-1054290-4055.manage.myhosting.com. On the other hand, my VPS's public IP is from Italy and this wrong IP is from the USA, so although the wrong IP is related to hosting (CPANEL), it doesn't seem to refer to another KVM on the same physical server. Were you able to install k8s? @luxas I have the same behavior but I copied the docker logs output too. Both /var/log/messages and kubeadm init's output are the same as in the original issue. |

|

@albpal so your VM and that second machine are both on CPANEL? Good sign, because then my case is the same! The fact that it was the same physical machine could be just a coincidence. I used two KVMs in my experiments, one with Ubuntu 16.04 and another with CentOS 7.3. Both had the same

apt-get install dnsmasq

rm -rf /etc/resolv.conf

echo "nameserver 127.0.0.1" > /etc/resolv.conf

chmod 444 /etc/resolv.conf

chattr +i /etc/resolv.conf

echo "server=8.8.8.8
server=8.8.4.4" > /etc/dnsmasq.conf

service dnsmasq restart

# reboot just in case

This brought the right IP address after In any case, it feels wrong to do an extra step of re-configuring DNS because it's very confusing (I'm not a server/devops guy and that whole investigation I went through almost made me cry 😨). I hope kubeadm will one day be able to detect if the provider's DNS servers are working in a strange way and automatically fix whatever is needed in the cluster. If anyone from the k8s team is willing to see what's happening, I'll be happy to share root access on a couple of new FirstVDS KVMs. Just email me or DM on twitter! |

|

Thanks @kachkaev ! I will try it tomorrow |

|

cc @kubernetes/sig-network-bugs Do you have an idea of why the DNS resolution fails above? Thanks @kachkaev we'll try to look into it. I don't think it's really kubeadm's fault per se, but if many users are stuck on the same misconfiguration we might add it to troubleshooting docs or so... |

|

My logs are very similar to @albpal's logs. |

|

I have been able to fix it!! Thanks so much @kachkaev for your hints! I think the problem was:

### Scenario:

resolv.conf

There is not any searchdomain!

hosts

As per logs, kubernetes container tries to connect to:

Get https://localhost:6443/api/v1/secrets?resourceVersion=0

And when I ask for:

So I think that these containers are trying to resolve localhost (without domain) and they are getting a wrong IP. Probably because "localhost.$(hostname -d)" is resolving to that IP, which I think is going to happen on almost any VPS service.

## What I did to fix the issue on a VPS CentOS 7.3 (apart from the steps shown at https://kubernetes.io/docs/setup/independent/install-kubeadm/#installing-kubelet-and-kubeadm):

As root:

I added the hostname -i at step 5 because if I don't, docker will add 8.8.8.8 to resolv.conf on the containers. I hope it helps others as well. Thanks!! |
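The diagnosis above (localhost qualified with the host's DNS domain resolving to an unrelated IP) can be checked before running kubeadm init. The following is only a sketch under assumptions: it uses `dig +short`, which may need the bind-utils or dnsutils package, and the helper names are hypothetical. Any CNAME that `dig` prints is also reported as non-loopback, so read the output with that in mind.

```shell
#!/bin/sh
# Hypothetical helpers, not part of kubeadm.

# Return 0 if the given address is a loopback address (127.0.0.0/8 or ::1).
is_loopback() {
  case "$1" in
    127.*|::1) return 0 ;;
    *) return 1 ;;
  esac
}

# Read addresses on stdin, one per line, and print every non-loopback one.
non_loopback_addresses() {
  while IFS= read -r addr; do
    [ -n "$addr" ] || continue
    is_loopback "$addr" || printf '%s\n' "$addr"
  done
}

# Print what DNS returns for localhost qualified with this host's DNS domain,
# which is the lookup suspected in the thread.
dns_localhost_addresses() {
  dig +short "localhost.$(hostname -d)"
}
```

Running `dns_localhost_addresses | non_loopback_addresses` prints nothing on a host where the lookup is harmless. Any address it prints is a candidate for the "wrong IP" seen in the kubelet logs.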

|

Glad to hear that @albpal! I went through your steps before

systemctl disable firewalld

systemctl stop firewalld

I do not recommend doing this because I'm not aware of the consequences - this just helped me to complete a test on an OS I normally don't use. Now I'm wondering what could be done to ease the install process for newbies like myself. The path between getting stuck at |

|

@Paulobezerr from what I see in your attachment I believe that your problem is slightly different. My apiserver logs contain something like:

reflector.go:190] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod:

Get https://localhost:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dhostname&resourceVersion=0:

dial tcp RANDOM_IP:6443: getsockopt: connection refused

while yours say:

reflector.go:190] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod:

Get https://10.X.X.X:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dhostname&resourceVersion=0:

dial tcp 10.X.X.X:6443: getsockopt: connection refused

(in the first case it is Unfortunately, I do not know what to advise except trying various Hope you get this sorted and share the solution with others! |

|

@kachkaev I forgot to mention I've applied the following firewall rule: $ firewall-cmd --zone=public --add-port=6443/tcp --permanent && sudo firewall-cmd --zone=public --add-port=10250/tcp --permanent && sudo systemctl restart firewalld It works fine in my environment with this rule applied, without deactivating the firewall. I'll add it to my previous comment to collect all the needed steps. |
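To confirm the rule above took effect without disabling firewalld, the open ports can be compared against the two the comment opens (6443/tcp for the API server and 10250/tcp for the kubelet). This is a small sketch; `missing_ports` and `REQUIRED_PORTS` are hypothetical names, and the port list only covers what this thread mentions.

```shell
#!/bin/sh
# Hypothetical helper, not part of firewalld or kubeadm.

# Ports opened in the thread for the API server and the kubelet.
REQUIRED_PORTS="6443/tcp 10250/tcp"

# Given the space-separated output of
# `firewall-cmd --zone=public --list-ports` as $1,
# print each required port that is not in the list.
missing_ports() {
  for port in $REQUIRED_PORTS; do
    case " $1 " in
      *" $port "*) ;;
      *) printf '%s\n' "$port" ;;
    esac
  done
}
```

For example, `missing_ports "$(firewall-cmd --zone=public --list-ports)"` prints nothing when both ports are open in the public zone.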

|

@juntaoXie Thanks. Updating systemd version per your comment worked for me. |

|

Still getting this issue for two days now, I am running all this behind a proxy and there doesn't seem to be a problem. This is also not a cgroup problem, both docker and my kubelet service use systemd. |

|

FWIW, I had this same problem on GCP. I tried using Ubuntu 16.04 and CentOS, with the following commands in a clean project:

$ gcloud compute instances create test-api-01 --zone us-west1-a --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud --machine-type f1-micro --description 'node 1 for api testing'

$ gcloud compute instances create test-api-02 --zone us-west1-b --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud --machine-type f1-micro --description 'node 2 for api testing'

$ gcloud compute instances create test-api-03 --zone us-west1-c --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud --machine-type f1-micro --description 'node 3 for api testing'

$ apt-get update

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ apt-get update && apt-get install -qy docker.io && apt-get install -y apt-transport-https

$ echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list

$ apt-get update && apt-get install -y kubelet kubeadm kubernetes-cni

$ systemctl restart kubelet

$ kubeadm init

So after beating my head against it for several hours I ended up going with:

$ gcloud beta container --project "weather-177507" clusters create "weather-api-cluster-1" --zone "us-west1-a" --username="admin" --cluster-version "1.6.7" --machine-type "f1-micro" --image-type "COS" --disk-size "100" --scopes "https://www.googleapis.com/auth/compute","https://www.googleapis.com/auth/devstorage.read_only","https://www.googleapis.com/auth/logging.write","https://www.googleapis.com/auth/monitoring.write","https://www.googleapis.com/auth/servicecontrol","https://www.googleapis.com/auth/service.management.readonly","https://www.googleapis.com/auth/trace.append" --num-nodes "3" --network "default" --enable-cloud-logging --no-enable-cloud-monitoring --enable-legacy-authorization

I was able to get a cluster up and running where I couldn't from a blank image. |

|

Same problem as @Paulobezerr - my env: CentOS 7.4.1708

kubeadm version: &version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.0", GitCommit:"6e937839ac04a38cac63e6a7a306c5d035fe7b0a", GitTreeState:"clean", BuildDate:"2017-09-28T22:46:41Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

For me the cause was not running with SELinux disabled. The clue was in his steps, the comment:

edit /etc/selinux/config and set SELINUX=disabled

The install steps here (https://kubernetes.io/docs/setup/independent/install-kubeadm/) for CentOS say:

For me, even though I had run setenforce 0, I was still getting the same errors. Editing /etc/selinux/config and setting SELINUX=disabled, then rebooting fixed it for me. |
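The distinction in this comment is between the running mode, which `setenforce 0` changes to permissive until the next reboot, and the mode configured in /etc/selinux/config, which applies at boot. A minimal sketch for reading the configured value follows. The helper name is hypothetical, and the sketch does not claim which mode kubeadm requires beyond what the comment reports.

```shell
#!/bin/sh
# Hypothetical helper, not part of kubeadm or the SELinux tools.

# Print the SELINUX= mode set in a config file
# (defaults to the standard /etc/selinux/config).
# This is the mode applied at the next boot, not the running mode.
configured_selinux_mode() {
  sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}" | head -n 1
}
```

Comparing `configured_selinux_mode` with the output of `getenforce` shows whether the host is merely permissive for the current boot or configured as disabled, which is the difference this commenter ran into.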

|

There seems to be a lot of (potentially orthogonal) issues at play here, so I'm keen for us to not let things diverge. So far we seem to have pinpointed 3 issues:

Once all three are addressed with PRs, perhaps we should close this and let folks who run into problems in the future create their own issues. That will allow us to receive more structured information and provide more granular support, as opposed to juggling lots of things in one thread. What do you think @luxas? |

|

For me, I went with docker 17.06 (17.03 is recommended, but not available at docker.io) and ran into the same problem. Upgrading to 17.09 magically fixed the issue. |

|

As this thread has gotten so long, and there are probably a lot of totally different issues, the most productive thing I can add besides @jamiehannaford's excellent comment is this: please open new, targeted issues with all relevant logs / information in case something fails with the newest kubeadm v1.8, which detects a faulty state automatically and much better than earlier versions. We have also improved our documentation around requirements and edge cases, which will hopefully save time for people. Thank you everyone! |

|

I had the same issue with 1.8 on CentOS 7. Has anyone had the same issue, or does anyone know how to fix it? |

|

@rushins If you want to get help with the possible issue you're seeing, open a new issue here with sufficient details. |

|

I got the same issue as @rushabh268 which is Env: |

|

Please, for the love of god, add this to the docs. I have been trying to set up my cluster for many nights after work under the guise of "having fun and playing with technology". I was done in by the master node not getting the correct IP when running nslookup localhost. Thank you to @kachkaev for the solution. |

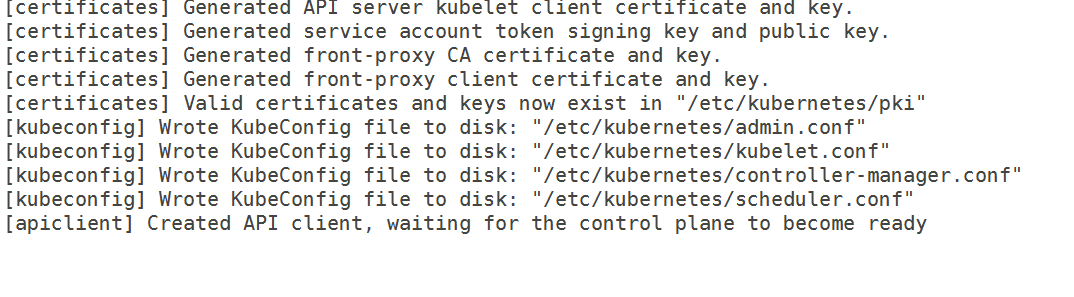

After downloading kubeadm 1.6.1 and starting kubeadm init, it gets stuck at [apiclient] Created API client, waiting for the control plane to become ready

I have the following 10-kubeadm.conf

So, it's no longer a cgroup issue. Also, I have flushed the iptables rules and disabled selinux. I have also specified the IP address of the interface which I want to use for my master but it still doesn't go through.

From the logs,

Versions

kubeadm version (use kubeadm version):

kubeadm version

kubeadm version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.1", GitCommit:"b0b7a323cc5a4a2019b2e9520c21c7830b7f708e", GitTreeState:"clean", BuildDate:"2017-04-03T20:33:27Z", GoVersion:"go1.7.5", Compiler:"gc", Platform:"linux/amd64"}

Environment:

Kubernetes version (use kubectl version):

Cloud provider or hardware configuration:

Bare metal nodes

OS (e.g. from /etc/os-release):

cat /etc/redhat-release

CentOS Linux release 7.2.1511 (Core)

Kernel (e.g. uname -a):

uname -a

Linux hostname 3.10.0-327.18.2.el7.x86_64 #1 SMP Thu May 12 11:03:55 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

Others:

docker -v

Docker version 1.12.6, build 96d83a5/1.12.6

rpm -qa | grep kube

kubelet-1.6.1-0.x86_64

kubernetes-cni-0.5.1-0.x86_64

kubeadm-1.6.1-0.x86_64

kubectl-1.6.1-0.x86_64

What happened?

Kubeadm getting stuck waiting for control plane to get ready

What you expected to happen?

It should have gone through and finished the init
