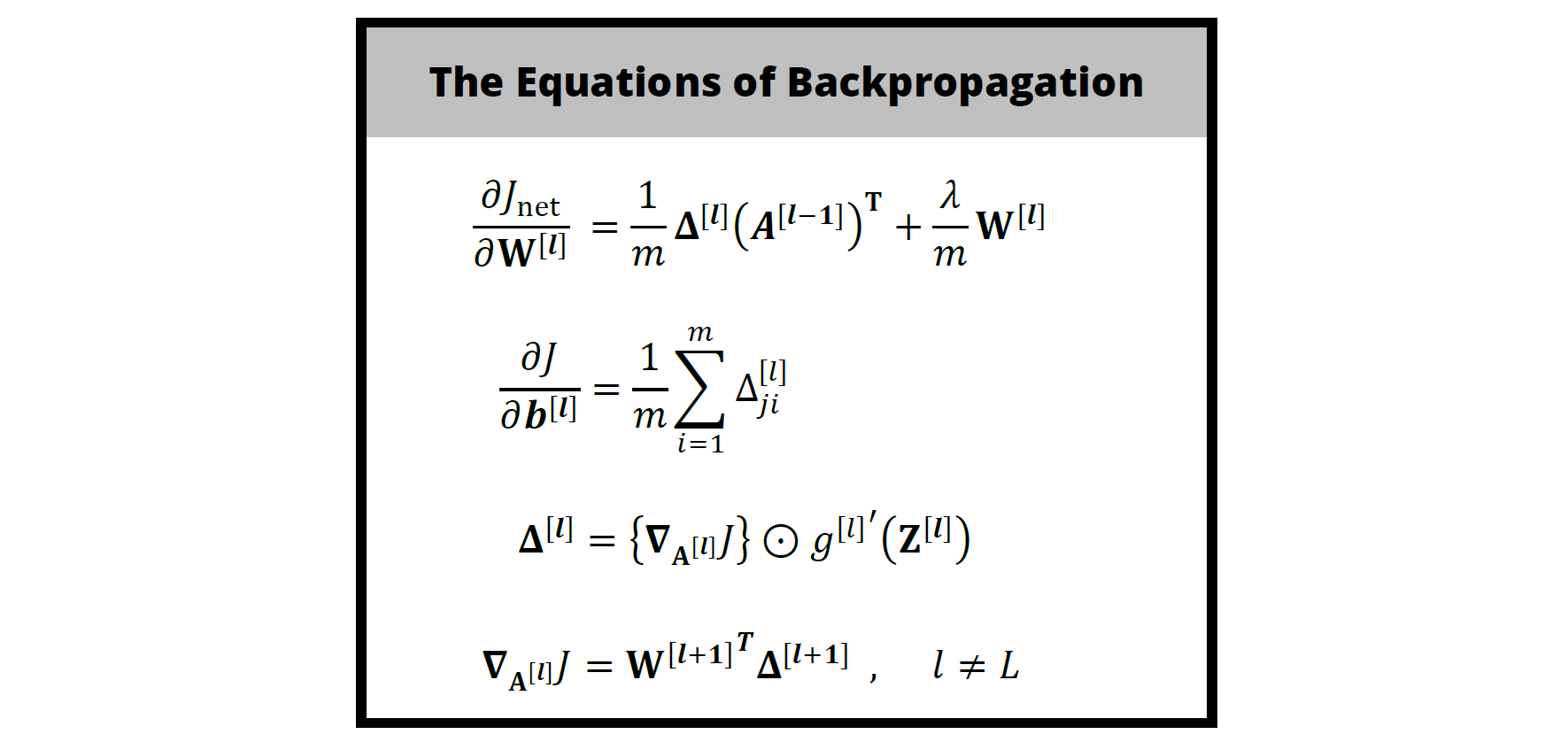

Andrew Ng's Coursera courses on Machine Learning and Deep Learning provide only the equations for backpropagation, without their derivations. To accompany my studies, I have written up full derivations of the backpropagation algorithm (for differentiating a neural network's cost function) as they apply to both the Stanford Machine Learning course and the deeplearning.ai Deep Learning specialization.

My first derivation here, which is just an excerpt from my lecture notes, was motivated mostly by the need to make sense of the unusual notation and network structure (e.g., bias nodes rather than bias vectors) used throughout the Machine Learning Coursera course offered by Stanford.
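To make the bias-node versus bias-vector distinction concrete, here is a minimal sketch of a single layer's pre-activation computed both ways. The first form, z = W a + b with a separate bias vector b, is the Deep Learning specialization's convention. The second form prepends a constant bias unit a_0 = 1 to the activations and stores the bias weights as the first column of a matrix Theta, which is the Machine Learning course's convention. The helper names (`matvec`, `z_bias_vector`, `z_bias_node`, `to_theta`) and the numbers are hypothetical, chosen only for illustration.

```python
# Hypothetical helpers: a sketch comparing two bias conventions on one layer.

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def z_bias_vector(W, b, a):
    """Bias-vector convention: z = W a + b."""
    return [wa + b_i for wa, b_i in zip(matvec(W, a), b)]

def z_bias_node(Theta, a):
    """Bias-node convention: prepend a bias unit a_0 = 1 to the activations;
    the bias weights live in column 0 of Theta, so z = Theta [1; a]."""
    return matvec(Theta, [1.0] + a)

def to_theta(W, b):
    """Fold the bias vector b into W as an extra leading column."""
    return [[b_i] + row for row, b_i in zip(W, b)]

# A layer with 3 units fed by 2 inputs (illustrative values only).
W = [[0.5, -1.0], [2.0, 0.25], [-0.75, 1.5]]
b = [0.1, -0.2, 0.3]
a = [1.0, 2.0]

z1 = z_bias_vector(W, b, a)
z2 = z_bias_node(to_theta(W, b), a)
print(z1)
print(z2)
```

Both calls produce the same pre-activations (up to floating-point rounding), which is why the two courses' equations describe the same network. The bias-node form simply absorbs b into the weight matrix, at the cost of an extra column that must be handled separately when regularizing.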

My second derivation here formalizes, streamlines, and updates the first so that it is more consistent with the modern network structure and notation used in the Coursera Deep Learning specialization offered by deeplearning.ai, and so that each step is more logically motivated by the one before it. In other words, it better shows how the backpropagation algorithm might be obtained from first principles if we had never heard of it before.