Notes on using/applying machine learning


Images (DL)

- Center (subtract the mean image or the per-channel mean)

- Not common to do PCA, normalization, etc.
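A minimal NumPy sketch of the centering step, assuming images are stored as float arrays in (N, H, W, C) layout. The function name `center_images` is hypothetical. The mean is computed on the training split only and then reused for the test split, which is the usual practice.

```python
import numpy as np

def center_images(train, test, per_channel=True):
    """Subtract a mean computed on the training set only.

    train, test: float arrays of shape (N, H, W, C) (assumed layout).
    per_channel=True  -> one mean per channel, shape (C,)
    per_channel=False -> the full mean image, shape (H, W, C)
    """
    if per_channel:
        mean = train.mean(axis=(0, 1, 2))
    else:
        mean = train.mean(axis=0)
    # Broadcasting applies the same training mean to every image in both splits.
    return train - mean, test - mean, mean

rng = np.random.default_rng(0)
train = rng.uniform(0, 255, size=(8, 4, 4, 3))  # toy "images"
test = rng.uniform(0, 255, size=(2, 4, 4, 3))
train_c, test_c, mean = center_images(train, test)
```

The per-channel mean is a 3-vector for RGB, so it is cheaper to store than a mean image and usually works about as well.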


A simple way to deal with outliers (Python)

- Numerical: log, log(1+x), normalization, binarization

- Categorical: one-hot encoding, TF-IDF (text), weight of evidence

- Time series: statistics, MFCC (audio), FFT

- Numerical/time series to categorical: RF/GBM
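A few of the numerical and categorical transforms listed above, sketched with plain NumPy. The toy data and the binarization threshold (`> 0`) are illustrative assumptions, and "normalization" is shown here as standardization (zero mean, unit variance).

```python
import numpy as np

counts = np.array([0.0, 1.0, 10.0, 1000.0])  # heavy right tail

# Numerical: log(1 + x) compresses large values and is defined at x = 0.
log_counts = np.log1p(counts)

# Numerical: standardization to zero mean and unit variance.
standardized = (counts - counts.mean()) / counts.std()

# Numerical: binarization against a threshold (threshold chosen arbitrarily).
is_nonzero = (counts > 0).astype(np.int64)

# Categorical: one-hot encoding via np.unique's inverse indices.
colors = np.array(["red", "green", "red", "blue"])
categories, idx = np.unique(colors, return_inverse=True)  # categories are sorted
one_hot = np.eye(len(categories), dtype=np.int64)[idx]
```

Libraries such as scikit-learn provide equivalent transformers; the NumPy version just makes each step explicit.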

- Squared loss is minimized by the expectation (mean). Pick it when learning the expected return on a stock.

- Logistic loss is minimized by the probability. Pick it when learning the probability of a click on an advertisement.

- Hinge loss is minimized by the 0/1 (yes/no) answer. Pick it when you want a hard prediction.

- Quantile loss is minimized by a quantile (the median when tau = 0.5). Pick it when you want to predict house prices.

- Ensembling multiple loss functions

Adapted from: https://github.com/JohnLangford/vowpal_wabbit/wiki/Loss-functions
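The claims above can be checked numerically for two of the losses: for a fixed set of outcomes, the constant prediction with the lowest squared loss is the mean, and the one with the lowest quantile (pinball) loss at tau = 0.5 is the median. This sketch uses made-up, skewed "prices" and a brute-force grid search; logistic and hinge loss are not covered.

```python
import numpy as np

def quantile_loss(y, pred, tau):
    # Pinball loss on residual r = y - pred: tau * r if r >= 0, else (tau - 1) * r.
    r = y - pred
    return np.mean(np.maximum(tau * r, (tau - 1) * r), axis=-1)

# Skewed outcomes, e.g. house prices with one very expensive house (made-up data).
y = np.array([100.0, 120.0, 130.0, 150.0, 1000.0])
candidates = np.linspace(0, 1000, 1001)  # constant predictions 0, 1, ..., 1000

# Rows = candidate predictions, columns = outcomes.
residuals = y[None, :] - candidates[:, None]
sq = np.mean(residuals ** 2, axis=1)
q50 = quantile_loss(y[None, :], candidates[:, None], 0.5)

best_sq = candidates[np.argmin(sq)]    # mean of y = 300
best_q50 = candidates[np.argmin(q50)]  # median of y = 130
```

The single outlier drags the squared-loss optimum up to 300, while the median-based prediction stays at 130, which is why quantile loss suits skewed targets like house prices.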

- If you are using ReLU, it is good practice to initialize the biases with a slightly positive value to avoid dead neurons (small networks).

- He initialization (Xavier initialization adapted for ReLU):

`W = np.random.randn(fan_in, fan_out) / np.sqrt(fan_in / 2)` (recommended).

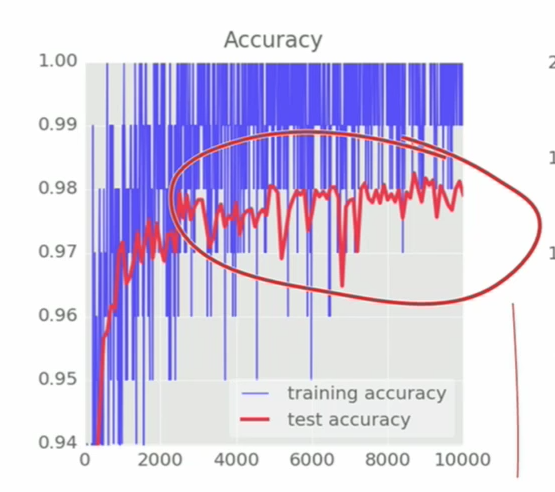
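A small experiment showing why the He scaling matters, assuming a plain stack of fully connected ReLU layers with zero bias (width, depth and batch size are arbitrary choices). `he_init` draws weights with variance 2 / fan_in, which is the same as dividing `randn` by `sqrt(fan_in / 2)`; `small_init` is a naive fixed 0.01 scale for comparison. Both helper names are hypothetical.

```python
import numpy as np

def he_init(fan_in, fan_out, rng):
    # Same distribution as np.random.randn(fan_in, fan_out) / np.sqrt(fan_in / 2).
    return rng.standard_normal((fan_in, fan_out)) / np.sqrt(fan_in / 2)

def small_init(fan_in, fan_out, rng):
    # Naive fixed-scale initialization, for comparison.
    return 0.01 * rng.standard_normal((fan_in, fan_out))

def final_mean_sq_activation(init, depth=10, width=256, batch=256, seed=0):
    rng = np.random.default_rng(seed)
    h = rng.standard_normal((batch, width))  # unit-variance inputs
    for _ in range(depth):
        h = np.maximum(0.0, h @ init(width, width, rng))  # ReLU layer, zero bias
    return np.mean(h ** 2)

he = final_mean_sq_activation(he_init)        # stays close to 1
small = final_mean_sq_activation(small_init)  # collapses toward 0
```

With He scaling each ReLU layer keeps the mean squared activation roughly constant, while the 0.01 scale shrinks it by a factor of about 0.013 per layer, so deep layers receive almost no signal.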

- To sanity-check the network, start with a very small dataset (e.g. 20 images), turn off regularization, and overfit the model. If everything is fine, you should see accuracy ≈ 1 and loss ≈ 0.

- With a high learning rate, (basic) SGD takes big steps and progresses fast at first, but then bounces around instead of settling into a minimum. Solution: use a decaying learning rate, or Adam and similar optimizers.

- Decay the learning rate over time.

- Getting some ideas: in training, start with two extreme learning rates (e.g. 1e-6 and 1e6) and narrow the range incrementally (binary-search style) until the loss declines in a sensible way (not staying flat, not exploding, no NaNs, exceptions, etc.).

- Fine tuning: here

- Prefer random search over grid search.
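The overfitting sanity check described above, sketched with a linear softmax classifier standing in for the network (an assumption to keep the example short). The 20 samples have random features and random labels, so the only way to fit them is to memorize; a correct model and training loop should still reach training accuracy 1 and a loss near 0. The learning rate and step count are illustrative.

```python
import numpy as np

def softmax_xent(logits, y):
    # Numerically stable softmax plus mean cross-entropy loss.
    z = logits - logits.max(axis=1, keepdims=True)
    p = np.exp(z)
    p /= p.sum(axis=1, keepdims=True)
    loss = -np.mean(np.log(p[np.arange(len(y)), y]))
    return p, loss

rng = np.random.default_rng(0)
n, d, k = 20, 50, 3                 # tiny dataset: 20 samples, 50 features, 3 classes
X = rng.standard_normal((n, d))
y = rng.integers(0, k, size=n)      # random labels: only memorization can fit them

W = np.zeros((d, k))
b = np.zeros(k)
lr = 0.3
_, initial_loss = softmax_xent(X @ W + b, y)  # equals log(k) at zero init

for step in range(3000):
    p, loss = softmax_xent(X @ W + b, y)
    grad = p.copy()
    grad[np.arange(n), y] -= 1.0    # d(loss)/d(logits) = p - one_hot(y)
    grad /= n
    W -= lr * (X.T @ grad)          # no regularization term, on purpose
    b -= lr * grad.sum(axis=0)

p, final_loss = softmax_xent(X @ W + b, y)
train_acc = np.mean(p.argmax(axis=1) == y)
```

If this kind of check fails to drive the loss down, the bug is usually in the gradient, the data pipeline, or the learning rate, not in the amount of data.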
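A sketch of the learning-rate search and decay ideas above. Learning rates are sampled log-uniformly (random search over exponents), so every order of magnitude between the two extremes gets equal coverage; after a coarse pass the range is narrowed and sampled again. The narrowed range `[1e-4, 1e-2]` and the step-decay parameters are hypothetical, chosen only for illustration.

```python
import numpy as np

def sample_log_uniform(low, high, n, rng):
    # Random search in log space: draw exponents uniformly, then exponentiate.
    return 10.0 ** rng.uniform(np.log10(low), np.log10(high), size=n)

def step_decay(lr0, epoch, drop=0.5, every=10):
    # Hypothetical step schedule: multiply the rate by `drop` every `every` epochs.
    return lr0 * drop ** (epoch // every)

rng = np.random.default_rng(0)
coarse = sample_log_uniform(1e-6, 1e6, 20, rng)  # first pass over the extreme range
fine = sample_log_uniform(1e-4, 1e-2, 20, rng)   # second pass after narrowing (illustrative)
schedule = [step_decay(0.1, epoch) for epoch in range(30)]
```

Each sampled rate would be used for a short training run, keeping the ones where the loss falls steadily. Random sampling tries a new value of every hyperparameter on each trial, whereas a grid repeats the same few values.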

Causes of overfitting:

- Too many neurons

- Not enough data

- Bad network

To cure overfitting:

- Dropout (note: some noise may come back; not recommended with conv nets)

- Data augmentation (e.g. add noise to the data)
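A minimal sketch of dropout in NumPy, using the common "inverted dropout" variant (an assumption; the notes do not say which variant). During training each unit is zeroed with probability `p_drop` and the survivors are scaled by `1 / (1 - p_drop)`, so the expected activation is unchanged and inference needs no rescaling.

```python
import numpy as np

def dropout(x, p_drop, rng, training=True):
    """Inverted dropout: zero units with probability p_drop during training
    and rescale the survivors so the expected value stays the same."""
    if not training or p_drop == 0.0:
        return x  # identity at inference time
    keep = rng.random(x.shape) >= p_drop
    return x * keep / (1.0 - p_drop)

rng = np.random.default_rng(0)
h = np.ones((1000, 100))
train_out = dropout(h, 0.5, rng)                  # entries are 0.0 or 2.0
eval_out = dropout(h, 0.5, rng, training=False)   # unchanged
```

The random mask is the "noise" dropout injects; a fresh mask is drawn on every forward pass during training.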

Real-world data: large values, different scales, skewed, correlated

Solution:


Data whitening:

- Scale: center around zero

- Decorrelate: e.g. replace correlated features A and B with (A+B)/2 and A-B (PCA, etc.)

- Add an additional layer and let the network do it (learn what the clean data looks like)
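PCA whitening is one concrete way to do the center / decorrelate / scale steps above. This NumPy sketch assumes rows are samples and columns are features; `eps` is an arbitrary small constant that avoids dividing by near-zero eigenvalues. The toy features `a` and `b` are strongly correlated, similar to the A/B example.

```python
import numpy as np

def pca_whiten(X, eps=1e-5):
    # 1) Center: subtract the per-feature mean.
    Xc = X - X.mean(axis=0)
    # 2) Decorrelate: rotate onto the eigenvectors of the covariance matrix.
    cov = Xc.T @ Xc / Xc.shape[0]
    eigvals, eigvecs = np.linalg.eigh(cov)
    Xrot = Xc @ eigvecs
    # 3) Scale each decorrelated direction to (approximately) unit variance.
    return Xrot / np.sqrt(eigvals + eps)

rng = np.random.default_rng(0)
a = rng.normal(100.0, 10.0, size=5000)   # large values
b = a + rng.normal(0.0, 1.0, size=5000)  # strongly correlated with a
X = np.column_stack([a, b])
Xw = pca_whiten(X)
cov_w = Xw.T @ Xw / len(Xw)              # close to the identity matrix
```

For two features with similar variance, the two eigenvectors point roughly along A+B and A-B, which is why the hand-made (A+B)/2, A-B transform works as a crude version of this.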


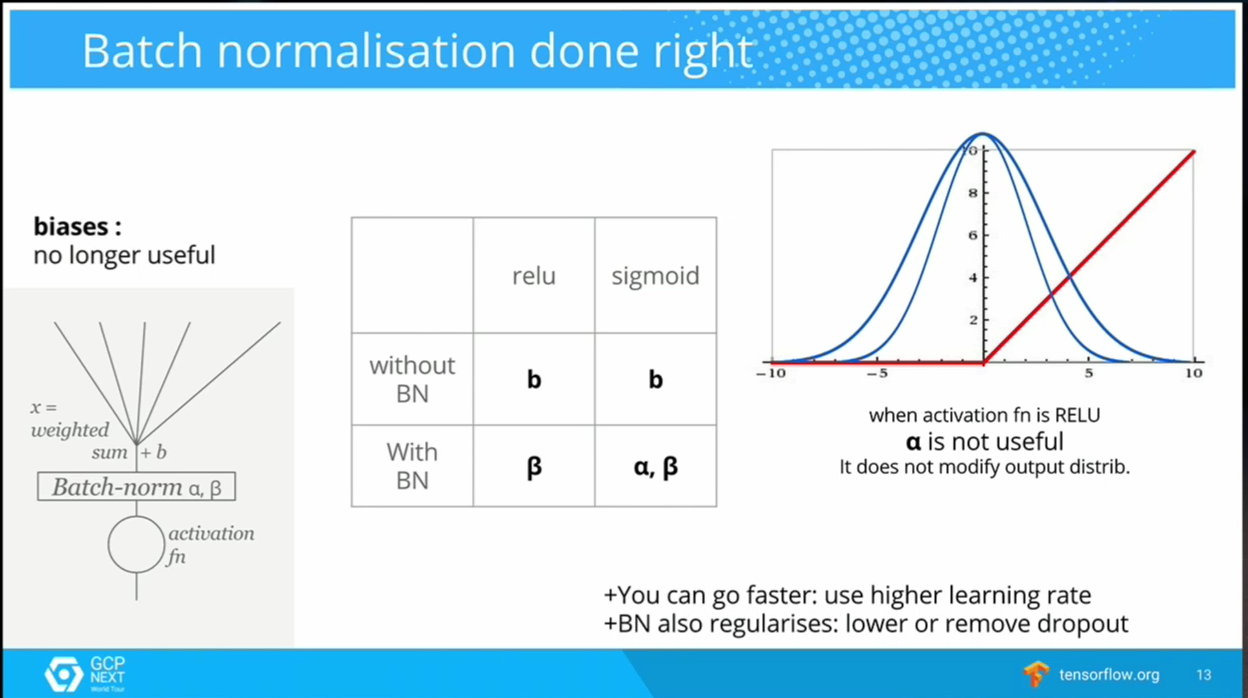

Batch normalization (better option)

Note:

- With batch normalization, beta (the learned shift) replaces the bias, so don't use a bias in the layer you batch-normalize.

- You can use a higher learning rate.

- You can stop using dropout (or use only a little).
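A training-mode batch-normalization forward pass in NumPy, showing why beta makes the bias redundant: subtracting the batch mean cancels any constant bias the previous layer adds. This sketch leaves out the running mean/variance that a real implementation keeps for inference; `eps` is a conventional small constant.

```python
import numpy as np

def batchnorm_forward(x, gamma, beta, eps=1e-5):
    # Normalize each feature over the batch, then apply the learned
    # scale (gamma) and shift (beta).
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 8))  # pre-activations of a layer without bias
bias = rng.standard_normal(8)     # a bias the layer might have added
gamma, beta = np.ones(8), np.zeros(8)

out_without_bias = batchnorm_forward(x, gamma, beta)
out_with_bias = batchnorm_forward(x + bias, gamma, beta)  # identical output
```

Since the bias disappears in the mean subtraction, beta is the only shift that actually reaches the next layer.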

- Ensembling independent models increases accuracy by ~2%.

- You can also ensemble saved checkpoints of a single model.
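One simple way to ensemble, sketched here as an assumption (the notes don't specify a method): average the predicted class probabilities of several models, or of several saved checkpoints of one model, and take the argmax. The probability arrays below are made-up outputs for two examples and three classes.

```python
import numpy as np

def ensemble_predict(prob_list):
    # Average class probabilities across models/checkpoints, then pick the top class.
    avg = np.mean(np.stack(prob_list), axis=0)
    return avg, avg.argmax(axis=1)

# Hypothetical softmax outputs: rows = examples, columns = classes.
m1 = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
m2 = np.array([[0.4, 0.5, 0.1], [0.1, 0.3, 0.6]])
m3 = np.array([[0.7, 0.2, 0.1], [0.2, 0.2, 0.6]])
avg, pred = ensemble_predict([m1, m2, m3])
```

For the second example, `m1` alone predicts class 1 but the average picks class 2; disagreements like this are where the ensemble gains accuracy.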

- Feature extraction / fine-tuning an existing network: Caffe

- Complex uses of pretrained models: Lasagne or Torch

- Write your own layers: Torch

- Crazy RNNs: Theano or TensorFlow

- Huge model, need parallelism: TensorFlow