Question about ACFM #6

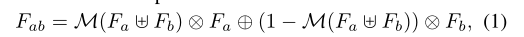

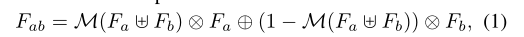

Hi, thanks for your excellent work. I am a little confused about ACFM in the paper. In the formula, why is Fb multiplied by the weighting factor 1-M, instead of being multiplied by M as Fa is?
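To make the two options concrete, here is a minimal sketch of what I am comparing. It assumes the fusion has the form F = M * Fa + (1 - M) * Fb with each entry of M in [0, 1]; the function names `fuse` and `fuse_shared_weight` and the toy values are hypothetical and are not taken from the repository.

```python
def fuse(fa, fb, m):
    """Complementary weighting (assumed form): m * fa + (1 - m) * fb."""
    if not (len(fa) == len(fb) == len(m)):
        raise ValueError("fa, fb and m must have the same length")
    return [w * a + (1.0 - w) * b for a, b, w in zip(fa, fb, m)]


def fuse_shared_weight(fa, fb, m):
    """Alternative raised in the question: m * fa + m * fb."""
    if not (len(fa) == len(fb) == len(m)):
        raise ValueError("fa, fb and m must have the same length")
    return [w * a + w * b for a, b, w in zip(fa, fb, m)]


# Toy features: three positions, constant values per branch.
fa = [1.0, 1.0, 1.0]
fb = [3.0, 3.0, 3.0]
m = [0.0, 0.5, 1.0]  # per-position weights in [0, 1]

complementary = fuse(fa, fb, m)           # always lies between fa and fb
shared = fuse_shared_weight(fa, fb, m)    # scales the sum of both branches

print(complementary)
print(shared)
```

With 1-M, the two weights sum to one at every position, so the output is an interpolation between Fa and Fb. With M on both branches, the output is just the sum of the two features rescaled by M, so M cannot prefer one branch over the other.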
