
Unable to copy to amazon while adding data #122


Comments

I'm looking into it @sandrinebedard, hopefully it's something simple.

Reviewing Policy

Here is the IAM policy @RignonNoel set up yesterday:

Tracing

I turned up debugging and saw:

This tells me that the failure is in the request. That doesn't really tell me anything new, except that it rules out git-annex doing some weird extra step in the background that needs extra permissions.

Can I upload anything at all without git-annex? Ah! Yes, I can. Sort of. The link doesn't work; it gives 403 (not 404, 403!, so the data is there, it's just locked):

This reminds me of a problem from long ago. Because we're using the

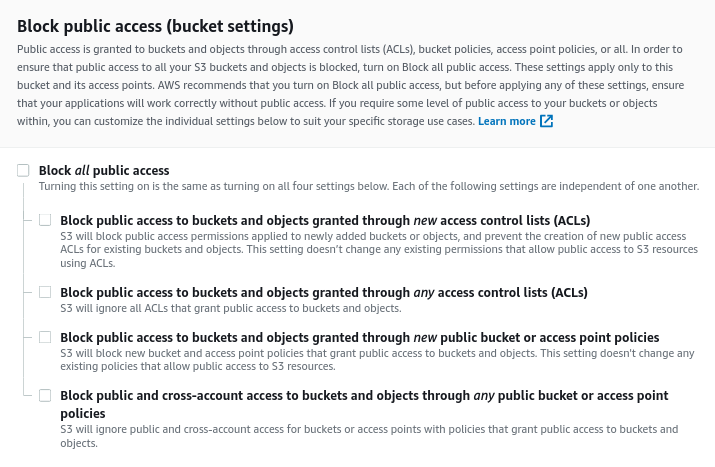

so maybe that's a red herring. I wonder if the problem is that Sandrine lacks permission to mark data as public. That wouldn't show up with the other dataset backups, because those aren't trying to make data public: I only ever access them using Access Keys set up with permissions specifically for the backups. Indeed, in the trace above you can see git-annex trying to set which corresponds to

Unfortunately, it's not obvious which permissions we're lacking, because the 'admin' policy is simply:

But there we go, I can reproduce it with

Okay, so now I should be able to google it, and after about 10 minutes of searching I found this SO post, which seems pretty close, though not an exact match for this problem. Their problem was that they had Block Public Access enabled, which we don't. We currently have and but I wonder if we should set instead; I think we would then probably need to write a Policy attached to the Bucket instead of to the Users, which I would need to google around to learn how to do.
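The 403-versus-404 check from the start of this comment can be repeated without git-annex or a browser. Below is a minimal Python sketch, assuming virtual-hosted-style S3 URLs; the helper names `public_object_url` and `anonymous_status`, and the bucket and key used in the usage line, are hypothetical. One caveat: for a missing key, S3 answers 403 rather than 404 unless the caller is allowed to list the bucket, so a 403 on its own only shows the object exists when, as here, it was just uploaded.

```python
from typing import Optional
import urllib.error
import urllib.parse
import urllib.request


def public_object_url(bucket: str, key: str, region: Optional[str] = None) -> str:
    """Build a virtual-hosted-style HTTPS URL for an S3 object (hypothetical helper)."""
    if region is None:
        host = f"{bucket}.s3.amazonaws.com"
    else:
        host = f"{bucket}.s3.{region}.amazonaws.com"
    # Percent-encode the key, but keep "/" so the "folder" structure survives.
    return f"https://{host}/{urllib.parse.quote(key, safe='/')}"


def anonymous_status(url: str, timeout: float = 10.0) -> int:
    """Send an unauthenticated HEAD request and return the HTTP status code."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status  # 200: the object is publicly readable
    except urllib.error.HTTPError as err:
        # 403: access denied (also returned for a missing key if listing isn't allowed)
        # 404: no such key (only reported when the caller may list the bucket)
        return err.code
```

For example, `anonymous_status(public_object_url("example-dataset-bucket", "sub-01/anat/sub-01_T1w.nii.gz"))` returns the status an anonymous visitor would get for that (hypothetical) object.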

@kousu I'm sure you've looked into this, and sorry if this is a trivial comment, but could it be related to a git-annex version issue?

I've isolated it to an S3 policy issue.

If I try to turn off ACLs, it tells me I need to delete them first:

But that seems way better overall. We shouldn't have "sandrine uploaded this file" and "alex uploaded that file" lurking in the background. So I'm going to figure out how to do that, and maybe that will clear up the original problem in the process. If not, it'll make it simpler to solve.
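What "turning off ACLs" means for uploads fits in a few lines. This is a minimal Python model, not an AWS API: the function name `put_acl_outcome` is hypothetical, and it ignores IAM permissions and Block Public Access. It encodes the rule from AWS's PutObject documentation that, once Object Ownership is `BucketOwnerEnforced`, a PUT is accepted only if it specifies no ACL or the `bucket-owner-full-control` canned ACL; any other ACL fails with `AccessControlListNotSupported`.

```python
from typing import Optional

# Canned ACLs that can be sent on an object upload (x-amz-acl).
CANNED_ACLS = {
    "private",
    "public-read",
    "public-read-write",
    "authenticated-read",
    "aws-exec-read",
    "bucket-owner-read",
    "bucket-owner-full-control",
}


def put_acl_outcome(object_ownership: str, canned_acl: Optional[str]) -> str:
    """Simplified model: does S3 accept an upload carrying this canned ACL?

    Ignores IAM permissions and Block Public Access (assumption of this sketch).
    """
    if canned_acl is not None and canned_acl not in CANNED_ACLS:
        raise ValueError(f"unknown canned ACL: {canned_acl}")
    if object_ownership == "BucketOwnerEnforced":
        # ACLs disabled: only "no ACL" or bucket-owner-full-control get through.
        if canned_acl in (None, "bucket-owner-full-control"):
            return "accepted"
        return "AccessControlListNotSupported"
    if object_ownership in ("BucketOwnerPreferred", "ObjectWriter"):
        # ACLs still enabled; IAM and Block Public Access are not modelled here.
        return "accepted"
    raise ValueError(f"unknown Object Ownership setting: {object_ownership}")
```

In other words, a client that keeps sending, say, `public-read` on every upload would start failing once ACLs are disabled, even though the upload itself is otherwise permitted.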

Going to try to follow https://docs.aws.amazon.com/AmazonS3/latest/userguide/object-ownership-migrating-acls-prerequisites.html

Those docs are terrible, but I fumbled my way through creating this bucket policy:

and then it turned out I just had to uncheck the "Everyone" ACL:

Then I was able to turn on "BucketOwnerEnforced", which disables ACLs:

Now let's see if that made things better for Sandrine:

Okay, so no more ACLs, as expected. The upload worked:

This link also works for me in my browser. Does it work with git-annex, though? No, not yet:

So, a new error. And the link works

I've reported this upstream, since Amazon's deprecation of ACLs means git-annex's docs are out of date, or at least aging. Hopefully JoeyH has some time to deal with this without feeling pressured to take shortcuts...

I'll just upload the fix on our end, which again was

@sandrinebedard this should now be working for you. Can you please try again?
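For reference, here is what a generic public-read bucket policy looks like; it is the usual replacement for per-object "Everyone" ACLs. This is a sketch, not the policy created in this thread (that one didn't survive on this page); the bucket name and the helper `public_read_bucket_policy` are hypothetical. `OWNERSHIP_CONTROLS` is the shape S3's PutBucketOwnershipControls request takes to switch Object Ownership to `BucketOwnerEnforced`.

```python
import json


def public_read_bucket_policy(bucket: str) -> dict:
    """Generic bucket policy letting anyone GET objects (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",  # anonymous users included
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",  # every object, not the bucket itself
            }
        ],
    }


# Ownership-controls body that disables ACLs on the bucket.
OWNERSHIP_CONTROLS = {"Rules": [{"ObjectOwnership": "BucketOwnerEnforced"}]}

if __name__ == "__main__":
    bucket = "example-dataset-bucket"  # hypothetical bucket name
    print(json.dumps(public_read_bucket_policy(bucket), indent=2))
    print(json.dumps(OWNERSHIP_CONTROLS))
```

The printed policy could be saved to a file and applied with `aws s3api put-bucket-policy --bucket <bucket> --policy file://policy.json`. A public `"Principal": "*"` policy like this is only accepted while Block Public Access's policy settings are off, which the thread notes is the case for this bucket.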

Er but you better

@kousu I did

When running I get:

@sandrinebedard, hm you might need to If that doesn't work

I've given data-single-subject the same treatment. To test if it's behaving, I checked out a copy and did

Then tried to upload:

which looks good:

Then I went into IAM and added the

I've also deleted the

This did not work

However,

It worked!!! (still ongoing since there are a lot of images, but no errors!)

Great! I'm pretty confident this is done, then. tl;dr: I'm pretty sure the problem was that the policy created yesterday was missing the

The reason we never ran into this before is that @sandrinebedard is the first non-admin data curator we've onboarded. Everyone always had full permissions before.
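The tl;dr boils down to comparing what a non-admin policy allows with what a full-permissions policy allows. Here is a rough Python sketch of that comparison. It is a deliberately simplified model of IAM evaluation (an assumption: it ignores Conditions, NotAction/NotResource, bucket policies, ACLs and Block Public Access, and approximates IAM wildcards with `fnmatch`); AWS's IAM policy simulator remains the authoritative check. Both policies, the bucket name and the helpers `is_allowed`/`missing_actions` are hypothetical, and `s3:PutObjectAcl` (the IAM action for setting an object's ACL) only illustrates how a gap shows up; which permission was actually missing isn't recoverable from this page.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM lets Action/Resource be a single string or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified IAM check: an explicit Deny wins, otherwise any matching Allow."""
    allowed = False
    for statement in _as_list(policy.get("Statement", [])):
        # Action names are case-insensitive in IAM; resource ARNs are case-sensitive.
        actions = [a.lower() for a in _as_list(statement.get("Action", []))]
        resources = _as_list(statement.get("Resource", []))
        if not any(fnmatchcase(action.lower(), pattern) for pattern in actions):
            continue
        if not any(fnmatchcase(resource, pattern) for pattern in resources):
            continue
        if statement.get("Effect") == "Deny":
            return False
        if statement.get("Effect") == "Allow":
            allowed = True
    return allowed


def missing_actions(policy: dict, actions: list, resource: str) -> list:
    """Return the actions from `actions` that `policy` does not allow on `resource`."""
    return [a for a in actions if not is_allowed(policy, a, resource)]


# Hypothetical curator policy, NOT the one created in this thread.
CURATOR_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": "arn:aws:s3:::example-dataset-bucket",
        },
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::example-dataset-bucket/*",
        },
    ],
}

# Hypothetical "admin"-style policy that allows everything.
ADMIN_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}],
}
```

Running `missing_actions` against both policies for the same object ARN makes the difference between an admin and a curator explicit: anything the admin policy covers but the curator policy doesn't shows up in the returned list.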

Setting

For example:

However, the actual file does allow public access:

So the problem is on git-annex's side. It's because

So we have a catch-22: we can't disable ACLs with and have contributors with

Another problem: somehow we ended up with two copies of the amazon remote:

It looks like maybe it was... a... bad merge? But running

Reported upstream again at https://git-annex.branchable.com/bugs/S3_ACL_deprecation/, including the note that

Our current understanding:

So, as long as

I think the docs are a good enough workaround for now. Thanks a lot for taking the time to write them up! I like all the helpful comments on each line.

A note for the future: according to a git-annex tip, it should be possible to configure two separate remotes for our Amazon S3 bucket, one with

Context

To upload new data, I ran the command:

I get the following error:

I added my AWS credentials as stated here: https://github.com/spine-generic/spine-generic/wiki/git-annex#images-niigz
