
Google's Tacotron2 published #90

Comments

Yeah, I heard their audio. It sounds pretty amazing! I'm hoping to find some time to try to implement it next week. In some ways it's a simpler architecture; for example, they got rid of the CBHGs. They also use WaveNet as a vocoder, but it's trained independently after the main model is trained, so hopefully it won't be too hard to get something working...

I'm so glad to hear that you will implement it, and I'm willing to help you finish it :)

I've been trying to reproduce the Deep Voice WaveNet with Tacotron, and I would also love to volunteer. I'm currently working on Parallel WaveNet.

It would be very helpful.

Wishing you all a prosperous 2018! ;)

This could be a problem. The old WaveNet vocoder takes way too long, but Google released a new WaveNet. It uses probability density distillation, among other things. This WaveNet vocoder is faster than real time (1000 times faster than the original). It is outlined here, and I have yet to see anyone implement it: https://deepmind.com/blog/high-fidelity-speech-synthesis-wavenet/
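Probability density distillation, as described for Parallel WaveNet, trains a fast student network by minimizing the KL divergence KL(P_S || P_T) between the student's output distribution and that of a pretrained teacher, using samples drawn from the student. The toy sketch below only illustrates that estimator: two 1-D Gaussians stand in for the student and teacher (a hypothetical simplification; the real models are autoregressive and flow-based networks over audio samples), and the Monte Carlo estimate is checked against the closed-form Gaussian KL.

```python
import numpy as np

def gaussian_logpdf(x, mu, sigma):
    """Log density of N(mu, sigma^2) evaluated at x."""
    return -0.5 * np.log(2 * np.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)

def mc_kl_student_teacher(mu_s, sigma_s, mu_t, sigma_t, n_samples=200_000, seed=0):
    """Monte Carlo estimate of KL(P_S || P_T) from samples of the student.

    Toy stand-in: both 'models' are 1-D Gaussians with hypothetical parameters.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(mu_s, sigma_s, size=n_samples)  # draw samples from the student
    log_ps = gaussian_logpdf(x, mu_s, sigma_s)      # student scores its own samples
    log_pt = gaussian_logpdf(x, mu_t, sigma_t)      # teacher scores the same samples
    return float(np.mean(log_ps - log_pt))

def exact_kl_gaussians(mu_s, sigma_s, mu_t, sigma_t):
    """Closed-form KL(N(mu_s, sigma_s^2) || N(mu_t, sigma_t^2)) for comparison."""
    return float(np.log(sigma_t / sigma_s)
                 + (sigma_s**2 + (mu_s - mu_t) ** 2) / (2 * sigma_t**2) - 0.5)

if __name__ == "__main__":
    est = mc_kl_student_teacher(0.0, 1.0, 0.5, 1.5)
    exact = exact_kl_gaussians(0.0, 1.0, 0.5, 1.5)
    print(f"Monte Carlo KL: {est:.4f}  closed form: {exact:.4f}")
```

In the real setting the gradient of this quantity flows back through the student's samples, which is what lets the student learn to generate all samples in parallel.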

@keithito We would LOVE to see a Tacotron 2 implementation here if you have a little time for it.

|

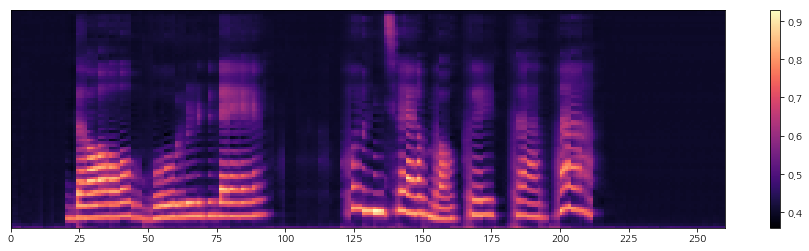

Hi, I just pushed a branch https://github.com/keithito/tacotron/tree/tacotron2-work-in-progress It's kind of a hybrid of Tacotron 1 and 2. The encoder, decoder, and attention mechanism are from the Tacotron 2 paper. However, it's still using Griffin-Lim to go from spectrogram to waveform. I was hoping to be able to use an existing open source WaveNet implementation, but I couldn't find one that runs in real-time. If anyone knows of one, please let me know. The location-sensitive attention seems like it leads to cleaner alignments: It's only been training for 143k steps, but I think it's already sounding pretty good. We'll see how it goes... |
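Location-sensitive attention extends additive (Bahdanau-style) attention with location features obtained by convolving the previous attention weights, which encourages the decoder to move forward consistently through the input. Below is a minimal NumPy sketch of a single attention step, assuming random hypothetical weights and toy sizes. The Tacotron 2 paper feeds cumulative previous weights through 32 filters of length 31; this sketch is not the code in the branch.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()

def location_sensitive_attention(query, memory, prev_alignment, params):
    """One decoder step of location-sensitive attention.

    query:          (d_query,)  decoder state s_i
    memory:         (T, d_enc)  encoder outputs h_1..h_T
    prev_alignment: (T,)        previous (or cumulative) attention weights
    params:         dict of hypothetical weights (see make_params)
    Returns (alignment of shape (T,), context of shape (d_enc,)).
    """
    F = params["F"]  # (n_filters, kernel_size) 1-D convolution filters
    T = memory.shape[0]
    assert F.shape[1] <= T, "mode='same' keeps length T only if kernel_size <= T"
    # Location features f_j: convolve the previous alignment with each filter.
    loc = np.stack([np.convolve(prev_alignment, k, mode="same") for k in F], axis=1)
    # Additive energies e_j = w^T tanh(W s + V h_j + U f_j + b)
    energies = np.tanh(
        query @ params["W"]      # (d_attn,), broadcast over all T positions
        + memory @ params["V"]   # (T, d_attn)
        + loc @ params["U"]      # (T, d_attn)
        + params["b"]
    ) @ params["w"]              # (T,)
    alignment = softmax(energies)
    context = alignment @ memory  # attention-weighted sum of encoder outputs
    return alignment, context

def make_params(d_query, d_enc, d_attn=8, n_filters=4, kernel_size=5, seed=0):
    """Random weights for illustration only; all sizes are arbitrary."""
    rng = np.random.default_rng(seed)
    return {
        "W": rng.normal(size=(d_query, d_attn)) * 0.1,
        "V": rng.normal(size=(d_enc, d_attn)) * 0.1,
        "U": rng.normal(size=(n_filters, d_attn)) * 0.1,
        "b": np.zeros(d_attn),
        "w": rng.normal(size=d_attn),
        "F": rng.normal(size=(n_filters, kernel_size)),
    }

if __name__ == "__main__":
    T, d_enc, d_query = 12, 6, 10
    rng = np.random.default_rng(1)
    memory = rng.normal(size=(T, d_enc))
    query = rng.normal(size=d_query)
    cumulative = np.zeros(T)
    cumulative[0] = 1.0  # start by attending to the first input position
    params = make_params(d_query, d_enc)
    for step in range(3):
        align, ctx = location_sensitive_attention(query, memory, cumulative, params)
        cumulative += align  # accumulate past alignments, as in Tacotron 2
        print(step, np.round(align, 3))
```

In a trained model the query changes every decoder step; it is held fixed here only to keep the example short.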

https://github.com/r9y9/wavenet_vocoder looks like it works.

@keithito Would you mind sharing your CKPT files for this? You're probably past 143K global steps (GS) by now. I used your hybrid Tacotron 1 / Tacotron 2 to train from scratch on the Nancy dataset, but even at 335K GS it isn't producing intelligible audio (unlike yours at 143K GS). It would be good to start training on the Nancy dataset from your checkpoint. :)

@keithito After how many iterations did the attention start to show proper alignment?

@rafaelvalle It was around 15k steps. Attention plots are attached: attention.zip

@MXGray: Here's a checkpoint after 800k steps:

@keithito Thanks a lot! I'll share the Nancy model trained with the hybrid Tacotron 1 & 2 once I get good results.

@keithito I double-checked the directory and file paths, and everything's correct. Was your 800K model trained using your hybrid Tacotron 1 / 2, or using your original implementation? Please advise. Thanks!

|

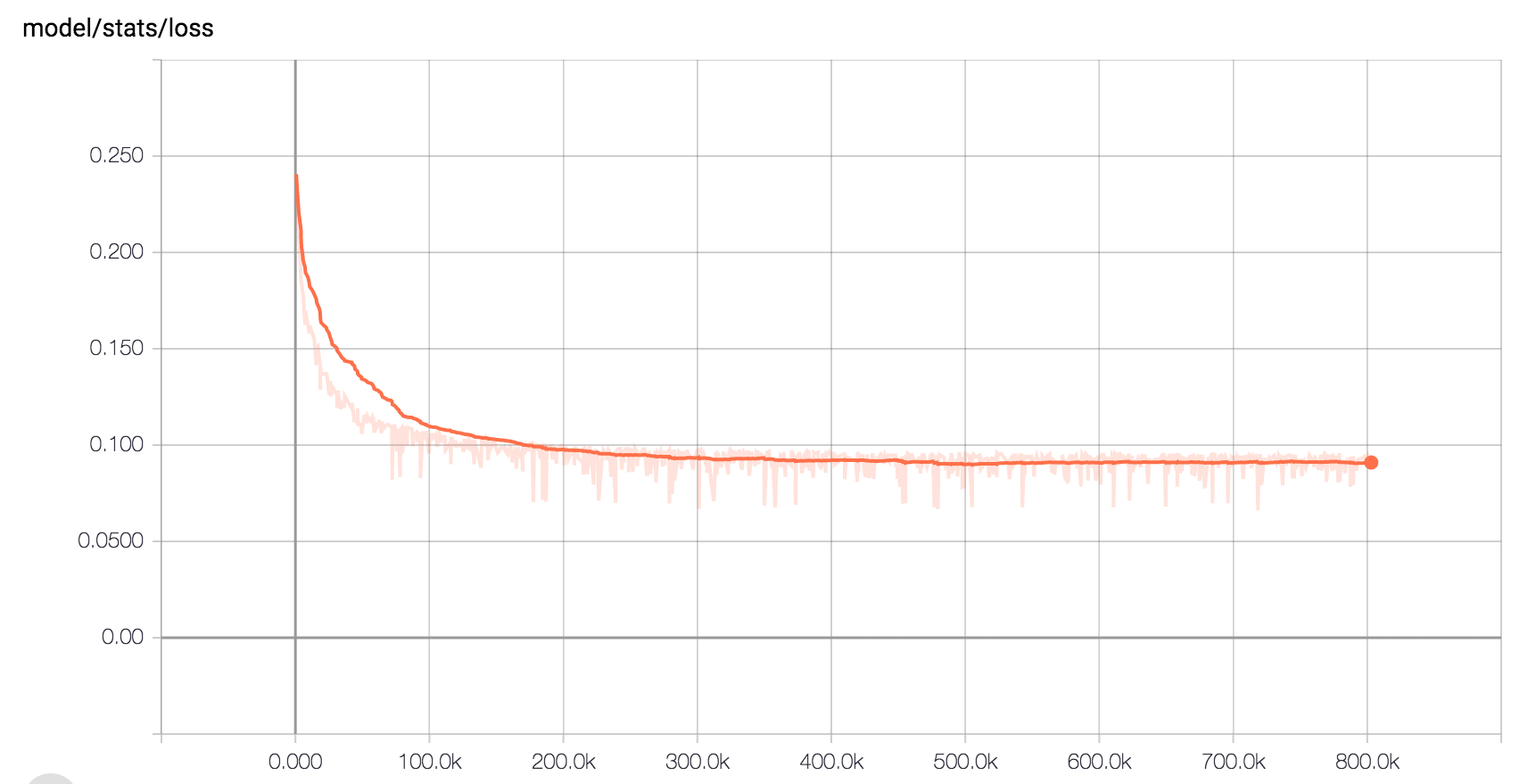

@keithito can you please share the loss curve of your Tacotron2 model? |

Hi @MXGray, the model was trained with the hybrid Tacotron 1/2, the same code that's checked into the tacotron2-work-in-progress branch. I'm not sure what's going wrong, but the naming is a little confusing because the directory and the checkpoint have the same name. I just verified that the following works:

@rafaelvalle: Here you go. The smoothing is 0.98.
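Assuming the 0.98 refers to TensorBoard's scalar smoothing slider, that setting is an exponential moving average over the logged loss values, so a weight of 0.98 heavily damps step-to-step noise. A minimal sketch of such smoothing follows; the bias correction for the first points is an assumption about how the viewer treats early values, not a documented detail of this thread.

```python
def ema_smooth(values, weight=0.98):
    """Exponential moving average s_t = weight * s_{t-1} + (1 - weight) * x_t.

    Each output is divided by (1 - weight**(t + 1)) so that early points are not
    pulled toward the zero initial state. Expects 0 <= weight < 1.
    """
    smoothed = []
    last = 0.0
    for t, x in enumerate(values):
        last = weight * last + (1 - weight) * x
        smoothed.append(last / (1 - weight ** (t + 1)))
    return smoothed

if __name__ == "__main__":
    losses = [1.0, 0.8, 0.9, 0.6, 0.7, 0.5]  # made-up example values
    print([round(v, 3) for v in ema_smooth(losses)])
```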

@keithito Thanks a lot! Did the loss explode at any point?

No loss explosion. I did not try the learning rate schedule from the paper; I suspect it would not make a huge difference compared with starting the decay at step 0.
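For comparison, the Tacotron 2 paper describes a learning rate of 10^-3 that decays exponentially to 10^-5 starting after 50,000 iterations. The sketch below contrasts that with starting the decay at step 0. The `decay_steps` and `decay_rate` values are hypothetical choices for illustration, and this is not the schedule used in the branch.

```python
def paper_style_lr(step, init_lr=1e-3, final_lr=1e-5, start_decay=50_000,
                   decay_steps=50_000, decay_rate=0.5):
    """Hold init_lr until start_decay, then decay exponentially, floored at final_lr.

    decay_steps and decay_rate are hypothetical: with these defaults the rate
    halves every 50k steps after decay begins.
    """
    if step < start_decay:
        return init_lr
    lr = init_lr * decay_rate ** ((step - start_decay) / decay_steps)
    return max(lr, final_lr)

if __name__ == "__main__":
    for step in (0, 50_000, 100_000, 200_000, 500_000):
        delayed = paper_style_lr(step)                  # decay starts after 50k steps
        immediate = paper_style_lr(step, start_decay=0)  # decay starts at step 0
        print(f"step {step:>7}: delayed {delayed:.2e}  immediate {immediate:.2e}")
```

The two curves only differ by a 50k-step shift, which is consistent with the expectation above that the choice may not matter much over a long training run.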

Output of Tacotron 1:

Output of Tacotron 2:

The Tacotron 1 outputs are clearer than the Tacotron 2 outputs, but the Tacotron 1 outputs are easily interrupted.

@keithito Hi, your work is very helpful to me. I really appreciate it.

No, I used the default (r=5). I'm not sure it will make much of a difference when integrating WaveNet; you're still generating the same number of mel spectrogram frames regardless of the reduction factor.
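To make the reduction-factor point concrete: with reduction factor r, each decoder step predicts r mel frames at once, so r changes the number of decoder steps rather than the number of frames handed to a vocoder (up to zero-padding of the last step). A minimal NumPy sketch, assuming the r frames are packed side by side in each step's output vector; that layout and the helper names are hypothetical, not necessarily what the branch does.

```python
import numpy as np

def decoder_steps(n_frames, r):
    """Decoder steps needed to emit n_frames when each step predicts r frames."""
    return -(-n_frames // r)  # ceiling division

def pad_frames(mels, r):
    """Zero-pad (n_frames, num_mels) targets so n_frames is a multiple of r."""
    n = mels.shape[0]
    pad = decoder_steps(n, r) * r - n
    return np.pad(mels, ((0, pad), (0, 0)))

def unfold_frames(step_outputs, r, num_mels):
    """Reshape (steps, r * num_mels) decoder outputs into (steps * r, num_mels) frames."""
    steps = step_outputs.shape[0]
    return step_outputs.reshape(steps * r, num_mels)

if __name__ == "__main__":
    # Hypothetical 80-band mel target with 401 frames.
    mels = np.random.default_rng(0).normal(size=(401, 80))
    for r in (1, 5):
        padded = pad_frames(mels, r)
        print(f"r={r}: {decoder_steps(len(mels), r)} decoder steps, {padded.shape[0]} frames")
```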

I see. Guess I'll have to try with r=1 :-)

@keithito Is Tacotron 2 implemented in the given branch?

Just to follow up, there are some great Tacotron 2 implementations here:

If you're interested in Tacotron 2, please use one of them.

How do I run your model?

Hey, I'm getting some errors when running eval.py or demo_server.py. Whenever I execute eval.py, I encounter the following errors:

Traceback (most recent call last):

The same type of error occurs when I run it the other way, shown below:

Hyperparameters:

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

@keithito A few days ago, Google published Tacotron 2. Do you plan to implement it?
