
runtime: dead Gs cause span fragmentation #9869

Comments

Rephrased as "dead Gs cause span fragmentation". It's not clear we can easily free dead Gs: it's hard to be sure all the pointers to them have been found. I expect there is code in the runtime that depends on Gs never being freed (and their memory reused to hold other types of data). I don't want to debug that. How big a problem is the fragmentation?

The fragmentation can be pretty bad. The case in #8832 turns the heap into a repeated pattern of one page allocated (for Gs) and eight pages free (once used for stacks). So although the heap is ~90% free, we'd have to grow the heap for any large allocation. That said, I don't think it is terribly common to use lots of Gs for a while and then never use them again. I agree this may be hard, but it is something to think about if we can find a way.
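To make the "mostly free but still has to grow" point concrete, here is a minimal sketch that models the heap as a slice of pages. Only the one-used, eight-free pattern comes from the comment above; the function names and the group count of 1000 are made up for illustration and are not runtime code.

```go
package main

import "fmt"

// buildHeap models the layout described above: a repeating pattern of one
// allocated page (G storage) followed by eight free pages (former stacks).
// true means the page is in use. This is a toy model, not the runtime's
// actual span bookkeeping.
func buildHeap(groups int) []bool {
	heap := make([]bool, 0, groups*9)
	for i := 0; i < groups; i++ {
		heap = append(heap, true) // one page holding Gs
		for j := 0; j < 8; j++ {
			heap = append(heap, false) // eight freed stack pages
		}
	}
	return heap
}

// freeStats returns the total number of free pages and the length of the
// longest contiguous run of free pages.
func freeStats(heap []bool) (totalFree, longestRun int) {
	run := 0
	for _, used := range heap {
		if used {
			run = 0
			continue
		}
		totalFree++
		run++
		if run > longestRun {
			longestRun = run
		}
	}
	return totalFree, longestRun
}

func main() {
	heap := buildHeap(1000) // hypothetical size, chosen for illustration
	total, longest := freeStats(heap)
	fmt.Printf("pages: %d, free: %d (%.1f%%), longest free run: %d pages\n",
		len(heap), total, 100*float64(total)/float64(len(heap)), longest)
}
```

In this model 8 of every 9 pages are free, yet no allocation larger than eight contiguous pages fits without growing the heap.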

Most network servers tend to create a new goroutine per incoming request (e.g., the HTTP server). If there's a sudden huge spike in requests (often not intentional, but due to a misbehaving external service), it can cause a huge number of goroutines to be created, and even after the requests have been served, the process consumes a huge amount of memory. I'm assuming this is due to the fragmentation described in this issue. As an example, we saw one process in production with a system usage of > 100GB, while the Go runtime thought it was using < 4GB.

@mandarjog: Why do you think this is related to fragmentation? This issue is about what happens after all those goroutines complete. The syndrome I would expect to see is that the heap is mostly unused, including having little memory used by stacks, but large allocations still grow the heap.

After the goroutines are done, I expect the usage to come down. https://user-images.githubusercontent.com/18554027/31787111-a33ae35e-b4d8-11e7-9cf1-2410427c1473.png We see here that externally measured memory comes back down, but does not go all the way down.

Once a G is allocated, it is never freed, so yes, the number of Gs at any point is the high-water mark of the execution so far. This issue is unlikely to affect the amount of memory used as reported by the OS. The fragmentation described in this issue is only for virtual addresses: all the holes described here can be (and are) given back to the OS by the scavenger, unless you're running on an OS where the page size is >8K. If you'd like to continue investigating, please open a new issue.

I'm seeing this issue in an application I wrote as well. It is an HTTP server which essentially kicks off a specialized background web crawler. A "scan" request comes to the HTTP server, which throws the crawler into a goroutine and responds to the HTTP request with "scan started successfully". Unfortunately, once it allocates memory, it never releases it, no matter how long I wait. I wrote a simple program to show what is happening below.

I posted on golang-nuts and was led here. Below is my post in its entirety. For me, this is a showstopper issue. If Go won't release memory from a very simple goroutine and it takes up gigs of memory (on my production app), I'll have to rewrite using something else.

Golang-nuts post:

To illustrate the issue I've written a simple example below. When I run this on my Windows system, I get 800 MB to 1 GB of memory consumption. What's fascinating is that if I change the time.Sleep to 100 milliseconds instead of 1, the process never goes above 6 MB of memory. This is what I would expect, since there are no objects here that should be retained in memory. And it doesn't matter how long I wait: the garbage collector never cleans up the mess either.

I've tried to profile it using pprof and it didn't help me. It helped me find and fix other issues, but not this. It's entirely possible I did it wrong, though, since I am new to using that tool. Help! Thanks in advance!

@robarchibald I'm pretty sure what you are seeing is not related to this issue. Please open a new one. The reason I'm pretty sure is that the space used is all for bytes.Buffer; it isn't for the goroutine descriptors or their stacks. Even if we retained the descriptors and their stacks, it is only ~8MB (stacks on Windows are 8K to start).

How are you measuring the memory used by the process? The Go runtime should give all the unused memory back to the OS after 5 minutes, although I admit I'm unsure how this is handled on Windows.

The difference between a 1ms sleep and a 100ms sleep is probably that in the latter, each goroutine finishes before the next one is generated, so there is only one goroutine at a time. In the former, there are lots of goroutines running simultaneously, each one using almost 1MB for its buffer.

Thanks @randall77. I'm measuring memory using the OS. The results are the same whether in Ubuntu 16.04 (production) or Windows 10 (my dev box). I specifically set the time.Sleep to 10 minutes because I know that garbage collection is supposed to clean up after 5 minutes. Plus, I explicitly call runtime.GC() immediately in case I could get it to collect the trash earlier. No dice either way. Go never releases the memory even after waiting a week. Sorry, I wasn't patient enough to wait longer than that. :)

I can certainly open a new issue, but this isn't an issue with bytes.Buffer. This is a problem with goroutine cleanup. Sure, this particular issue was opened because the descriptor and stack weren't cleaned up, but I think I'm showing here that the issue is MUCH bigger than that. Nothing is getting cleaned up if the system is busy enough or if the parallelism is high enough... at least that's what I'm assuming here, since I can change the delay to be longer and the problem goes away.

It isn't a bytes.Buffer issue. It isn't an ioutil.WriteFile issue. I only used bytes.Buffer for this example and that's not what I'm using in my real application. And I can do a fmt.Println instead of ioutil.WriteFile and it still does the same thing too. As @prashantv mentioned, he's seen 100 GB of memory usage due to what sounds like a similar issue to mine.

@6degreeshealth Show us exactly how you are measuring memory. Ideally on Ubuntu. Don't use words to tell us what you are doing, show us the exact commands that you are using, and the exact output that you see. Thanks.

On Linux, I'm using

Here's what I see on Windows. This is after it's gone idle. Nothing new is being spawned. It's just sitting there. It stays like this for 10 minutes until the program closes.

I've opened #22439 to discuss @6degreeshealth's issues.

I'm seeing a similar problem with a Go program. The program spawns a good number of goroutines throughout the day. I've noticed a 200 MB increase in held memory at the end of the day. pprof heap returns:

A 200 MB pileup on a daily basis is a huge amount. Is there any update on this issue? Any possible workarounds?

@prashanthjbabu

@randall77

@prashanthjbabu I guess it is possible your stacks are being freed, and the space use you're seeing is just G descriptors. That would take close to 1 million goroutines. Do you have that many goroutines live at once? Or perhaps you have a goroutine leak?

@randall77


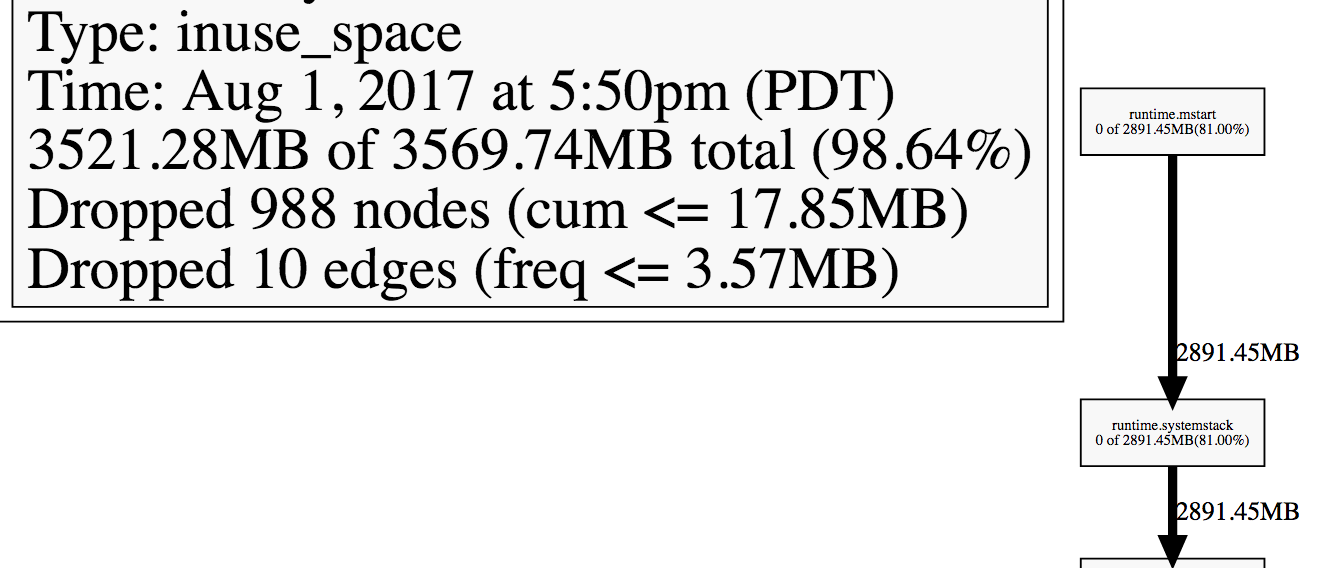

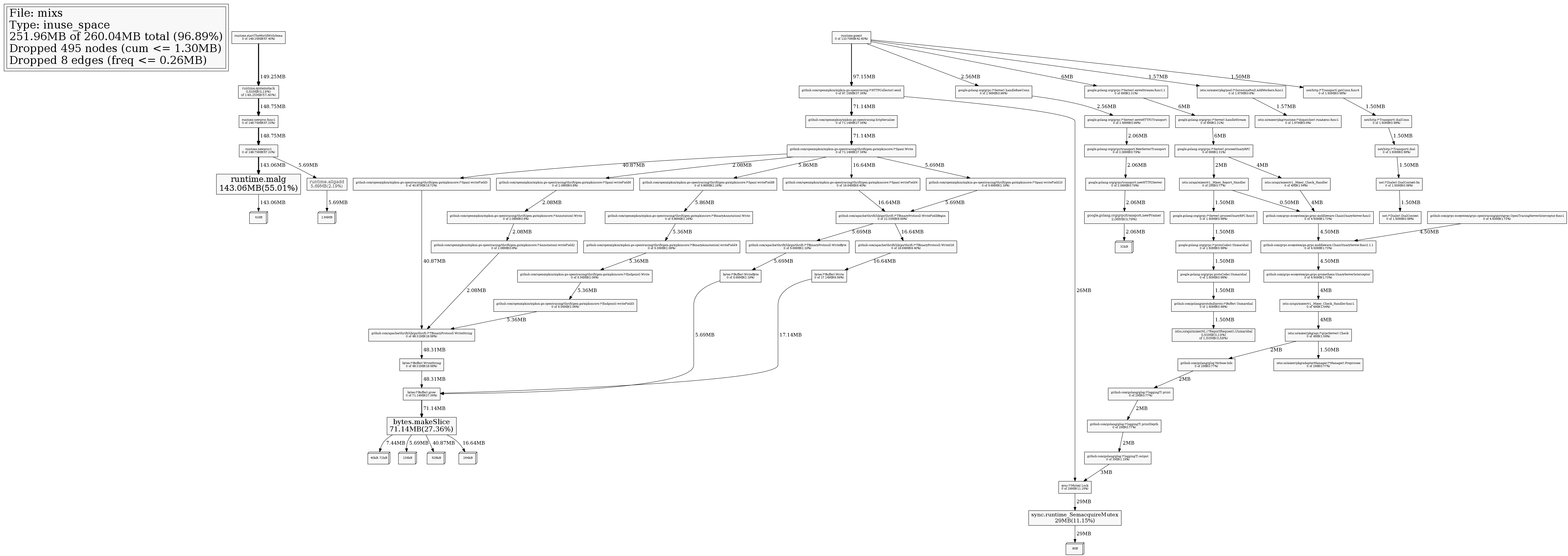

I think I see a similar picture in my Go web app:

Maybe I am wrong, but I don't see anything strange:

My pprof inuse_space report:

The curious thing is that I can't see which process consumes 1.5 GB...

I've added another handler with

But triggering the handler has no effect. I have been waiting for several days, but the memory wasn't returned to the OS. I'd appreciate any suggestions on how to release the memory :)

Once we allocate a G, it is allocated forever. We have no mechanism to free them.

We should free dead Gs if they sit in the global free queue for long enough. Or maybe free all of them at each GC?

I noticed this while debugging #8832. The stacks for dead Gs are freed at GC time. This leads to a fragmented heap because spans for G storage and stack storage alternate in the heap. When only the stacks are freed, the resulting free spans won't coalesce because the spans for storing the Gs aren't freed.
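The "allocated forever" behavior can be pictured with a toy free list. This is only an illustrative model under stated assumptions, not the runtime's actual G management: the `descriptor` and `pool` types and their methods are hypothetical names. It shows why the number of descriptors held equals the high-water mark of concurrent use.

```go
package main

import "fmt"

// descriptor stands in for a G. Hypothetical type for illustration only.
type descriptor struct{ id int }

// pool is a toy free list that reuses dead descriptors but never frees them.
type pool struct {
	free      []*descriptor
	allocated int // total descriptors ever created; never decreases
}

// get reuses a dead descriptor if one exists, otherwise allocates a new one.
func (p *pool) get() *descriptor {
	if n := len(p.free); n > 0 {
		d := p.free[n-1]
		p.free = p.free[:n-1]
		return d
	}
	p.allocated++
	return &descriptor{id: p.allocated}
}

// put returns a dead descriptor to the free list; nothing is ever released.
func (p *pool) put(d *descriptor) {
	p.free = append(p.free, d)
}

func main() {
	p := &pool{}

	// A burst of 1000 concurrent users, all of which then finish.
	burst := make([]*descriptor, 0, 1000)
	for i := 0; i < 1000; i++ {
		burst = append(burst, p.get())
	}
	for _, d := range burst {
		p.put(d)
	}

	// Steady state afterwards: only 10 in use at a time.
	for i := 0; i < 10; i++ {
		p.put(p.get())
	}

	fmt.Printf("allocated: %d, idle on free list: %d\n", p.allocated, len(p.free))
}
```

A fix along the lines proposed above would amount to trimming the free list after some idle period or at each GC, which this sketch deliberately does not do.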
