diff --git a/docs/images/perf_rdma_resnet50.png b/docs/images/perf_rdma_resnet50.png

deleted file mode 100644

index e4bcce8ae12c..000000000000

Binary files a/docs/images/perf_rdma_resnet50.png and /dev/null differ

diff --git a/docs/images/perf_rdma_vgg16.png b/docs/images/perf_rdma_vgg16.png

deleted file mode 100644

index 49d0d1a2c98c..000000000000

Binary files a/docs/images/perf_rdma_vgg16.png and /dev/null differ

diff --git a/docs/performance.md b/docs/performance.md

index 11a84ab3f316..8e10c3afe31a 100644

--- a/docs/performance.md

+++ b/docs/performance.md

@@ -1,12 +1,28 @@

# BytePS Performance with 100Gbps RDMA

+## NVLink + RDMA

+

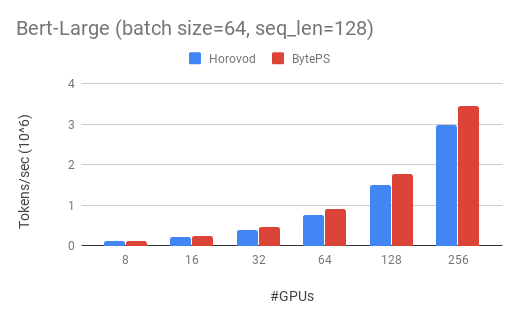

+We show our experiment on BERT-large training, which is based on GluonNLP toolkit. The model uses mixed precision.

+

+We use Tesla V100 32GB GPUs and set batch size equal to 64 per GPU. Each machine has 8 V100 GPUs (32GB memory) with NVLink-enabled.

+Machines are inter-connected with 100 Gbps RoCEv2 network.

+

+BytePS outperforms Horovod (after carefully tuned) by 16% in this case, both with RDMA enabled.

+

+

+

+## PCIe + RDMA

+

Note: here we present the *worse case scenario* of BytePS, i.e., 100Gbps RDMA + no NVLinks.

We get below results on machines that are based on PCIe-switch architecture -- 4 GPUs under one PCIe switch, and each machine contains two PCIe switches.

The machines are inter-connected by 100 Gbps RoCEv2 networks.

In this case, BytePS outperforms Horovod (NCCL) by 7% for Resnet50, and 17% for VGG16.

-

+

+

+

+

To have BytePS outperform NCCL by so little, you have to have 100Gbps RDMA network *and* no NVLinks. In this case, the communication is actually bottlenecked by internal PCI-e switches, not the network. BytePS has done some optimization so that it still outperforms NCCL. However, the performance gain is not as large as other cases where the network is the bottleneck.

+

+

+

+

To have BytePS outperform NCCL by so little, you have to have 100Gbps RDMA network *and* no NVLinks. In this case, the communication is actually bottlenecked by internal PCI-e switches, not the network. BytePS has done some optimization so that it still outperforms NCCL. However, the performance gain is not as large as other cases where the network is the bottleneck.

+

+

+

+

To have BytePS outperform NCCL by so little, you have to have 100Gbps RDMA network *and* no NVLinks. In this case, the communication is actually bottlenecked by internal PCI-e switches, not the network. BytePS has done some optimization so that it still outperforms NCCL. However, the performance gain is not as large as other cases where the network is the bottleneck.

+

+

+

+

To have BytePS outperform NCCL by so little, you have to have 100Gbps RDMA network *and* no NVLinks. In this case, the communication is actually bottlenecked by internal PCI-e switches, not the network. BytePS has done some optimization so that it still outperforms NCCL. However, the performance gain is not as large as other cases where the network is the bottleneck.