Using baal to get active learning samples for single label classification #181

Replies: 1 comment


Hello, I might be missing something, but can we get the accuracy/loss on the held-out set as well?

In addition, entropy finds examples with high aleatoric uncertainty, such as outliers, where the model is more prone to be incorrect. MC-Dropout + BALD can detect items near the decision boundary, but it won't pick up the noisy/outlier examples.

Also, yes, Bayesian deep learning often has worse accuracy but better calibration overall (link). It's an interesting tradeoff.

Comparing approaches in active learning is hard, especially on academic datasets. How clean is your dataset? We find that BALD is especially strong on "industry datasets" where the dataset is not well curated.

We can schedule a meeting if you would like :) PM me on Slack or on my personal email.
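To make the entropy vs. BALD distinction above concrete, here is a minimal sketch using the textbook definitions (predictive entropy of the averaged distribution, and BALD as that entropy minus the average per-pass entropy). It is plain Python rather than baal's own heuristics, and the two toy inputs `ambiguous` and `disputed` are hypothetical values chosen for illustration.

```python
import math

def entropy(p):
    """Shannon entropy (in nats) of one categorical distribution."""
    return -sum(q * math.log(q) for q in p if q > 0.0)

def mean_distribution(mc_probs):
    """Average the class probabilities over the stochastic forward passes."""
    n = len(mc_probs)
    return [sum(col) / n for col in zip(*mc_probs)]

def predictive_entropy(mc_probs):
    """Entropy of the averaged prediction (total uncertainty)."""
    return entropy(mean_distribution(mc_probs))

def bald(mc_probs):
    """Predictive entropy minus the mean per-pass entropy (disagreement between passes)."""
    expected_entropy = sum(entropy(p) for p in mc_probs) / len(mc_probs)
    return predictive_entropy(mc_probs) - expected_entropy

# Hypothetical 3-class outputs from 4 MC-Dropout passes, laid out as [pass][class].
# Every pass agrees that the input is ambiguous (noise-like uncertainty).
ambiguous = [[0.34, 0.33, 0.33]] * 4
# Each pass is confident, but the passes disagree with each other.
disputed = [
    [0.98, 0.01, 0.01],
    [0.01, 0.98, 0.01],
    [0.98, 0.01, 0.01],
    [0.01, 0.98, 0.01],
]

for name, item in [("ambiguous", ambiguous), ("disputed", disputed)]:
    print(name, round(predictive_entropy(item), 3), round(bald(item), 3))
```

In this toy case entropy ranks the `ambiguous` item first while BALD ranks the `disputed` item first, which is one way the two heuristics can end up selecting different samples.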


Hello team

Good Evening!

We are trying to use baal to sample images for an image classification problem. We have trained a single-label classification model (10 classes) using fastai.

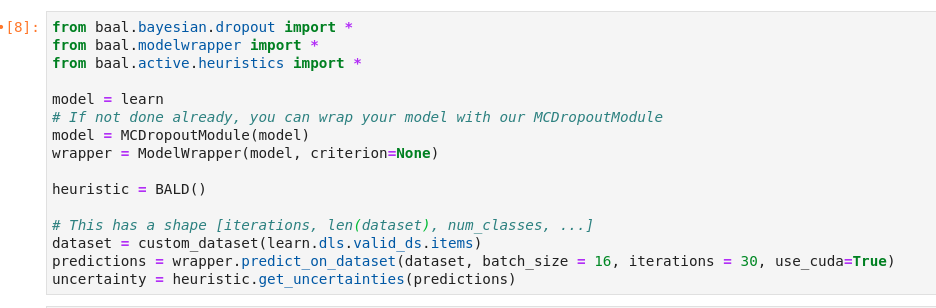

We wanted to check the benefit of using baal. As seen below, the trained model is used to get predictions on a labelled dataset (assume this is the new dataset that needs to be labelled; for this particular set we already know the labels). Using these predictions with BALD as the heuristic, we get our uncertainties.

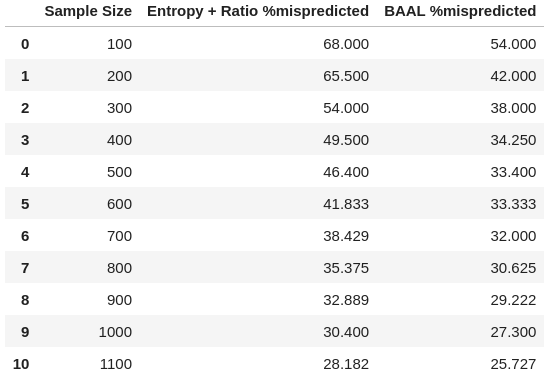

Next, we performed (entropy + ratio) sampling and sampling by BALD uncertainty scores. Within each set of selected samples, we computed the percentage of images that our model would mispredict, and we got the following table.

What we see is that the proportion of mispredicted samples among those picked by baal is quite low compared to the (entropy + ratio) sampling technique.

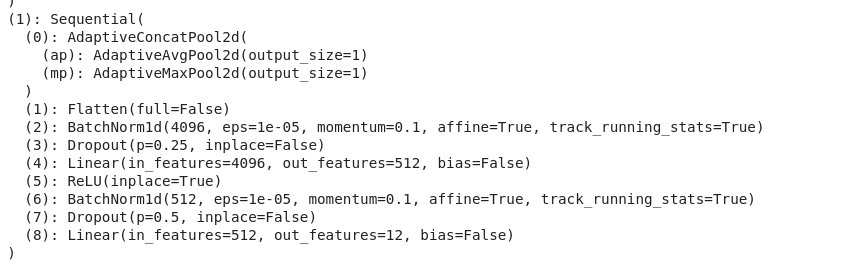
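For reference, the comparison described above can be sketched as follows: rank the samples by an uncertainty score, take the top `k`, and measure what fraction of them the model gets wrong. The function name `misprediction_rate_in_top_k` and the toy values are hypothetical and are not taken from our actual experiment.

```python
def misprediction_rate_in_top_k(scores, predictions, labels, k):
    """Fraction of the k highest-scoring samples whose prediction differs from the label."""
    if k <= 0:
        raise ValueError("k must be positive")
    # Indices sorted from most to least uncertain.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    picked = ranked[:k]
    wrong = sum(1 for i in picked if predictions[i] != labels[i])
    return wrong / len(picked)

# Toy data: one uncertainty score, one predicted class and one true class per sample.
scores = [0.9, 0.1, 0.7, 0.3, 0.5]
predictions = [2, 0, 1, 1, 0]
labels = [1, 0, 1, 0, 0]

top2_rate = misprediction_rate_in_top_k(scores, predictions, labels, k=2)
# Using k = dataset size gives the overall error rate as a baseline.
overall_rate = misprediction_rate_in_top_k(scores, predictions, labels, k=len(scores))
print(top2_rate, overall_rate)
```

Comparing the top-`k` rate against the overall error rate shows how much better a heuristic is than random selection at surfacing mispredicted samples.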

We used a resnet50 model with a custom head that has two dropout layers. Here is our classification head for your reference, which we have wrapped with the MCDropout module. The resnet50 backbone has no dropout layers.

We also observed that predicting with MC Dropout caused a 2% drop in accuracy, from 91% to 89%, over the entire dataset. We repeated this experiment with iterations = 10, 20, 30, but the accuracy changed by less than 1% and stayed at about 89% for predictions made using the wrapper.
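As a point of reference for the accuracy numbers above, this is a minimal sketch of one common way to score MC Dropout predictions: average the class probabilities over the stochastic passes, then take the argmax. The `[sample][pass][class]` layout and the toy values are assumptions for illustration and do not necessarily match the output shape of the baal wrapper.

```python
def mc_mean_prediction(mc_probs):
    """Average probabilities over the passes and return the most likely class index."""
    n = len(mc_probs)
    mean = [sum(col) / n for col in zip(*mc_probs)]
    return max(range(len(mean)), key=mean.__getitem__)

def mc_accuracy(all_mc_probs, labels):
    """Accuracy of the averaged MC Dropout prediction over a dataset."""
    predictions = [mc_mean_prediction(sample) for sample in all_mc_probs]
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)

# Toy binary problem: 3 samples, 3 stochastic passes each, laid out as [sample][pass][class].
all_mc_probs = [
    [[0.60, 0.40], [0.40, 0.60], [0.70, 0.30]],
    [[0.20, 0.80], [0.45, 0.55], [0.30, 0.70]],
    [[0.55, 0.45], [0.35, 0.65], [0.40, 0.60]],
]
labels = [0, 1, 0]

predictions = [mc_mean_prediction(sample) for sample in all_mc_probs]
print(predictions, mc_accuracy(all_mc_probs, labels))
```

For the third sample the first pass alone would predict class 0, while the averaged prediction is class 1, so a single pass and the MC average can disagree on individual items.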

Can you advise how we could use baal to the fullest to obtain the samples that are most likely to be mispredicted?
