
[FR] Neural prediction ignores end date (no backtesting) #1088

Comments

|

Yeah, I intentionally don't use the end date for NN (the flag should definitely be removed). The reason is that I added in-sample validation to see how the model does at various points, instead of just cherry-picking the last N days. As for Monte Carlo: why would you want an end date flag? It is a forecasting method, and taking away the last data points changes the distribution you sample from. Instead, one can look at (for example) how the distribution of returns changes over different periods of time. |

|

I don't get it. I think it's important to be able to pick the dates so we can backtest. Visualizing such results is a very important step in my opinion, and the feature is currently of little use to me without being able to see that validation visually. Monte Carlo: if I have data from 2021-01-01 to 2021-12-31, I want to run the simulation from 2021-10-01 until 2021-12-31. I want the most frequent case(s) (?) occurring during Monte Carlo to be plotted against the actual price line from 2021-10-01 to 2021-12-31, if that makes sense. |
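The comparison requested here can be sketched roughly as follows. This is only an illustration under stated assumptions, not the terminal's actual Monte Carlo code: the prices are synthetic, log returns are assumed i.i.d. normal, and `simulate_paths` and `percentile_band` are hypothetical helper names. The median and a 5%-95% band stand in for the "most frequent case(s)".

```python
import math
import random
import statistics


def simulate_paths(history, horizon, n_paths=1000, seed=0):
    """Simulate price paths from i.i.d. normal log returns fitted to `history`."""
    rng = random.Random(seed)
    log_returns = [math.log(b / a) for a, b in zip(history, history[1:])]
    mu = statistics.mean(log_returns)
    sigma = statistics.stdev(log_returns)
    paths = []
    for _ in range(n_paths):
        price, path = history[-1], []
        for _ in range(horizon):
            price *= math.exp(rng.gauss(mu, sigma))
            path.append(price)
        paths.append(path)
    return paths


def percentile_band(paths, lower=0.05, upper=0.95):
    """Per-step (low, median, high) values across all simulated paths."""
    band = []
    for step in zip(*paths):
        ordered = sorted(step)
        n = len(ordered)
        band.append((ordered[int(lower * (n - 1))],
                     ordered[n // 2],
                     ordered[int(upper * (n - 1))]))
    return band


# Synthetic daily prices for 2021-01-01 .. 2021-12-31 (365 points).
rng = random.Random(42)
prices = [100.0]
for _ in range(364):
    prices.append(prices[-1] * math.exp(rng.gauss(0.0005, 0.02)))

cutoff = 273  # index of 2021-10-01 when index 0 is 2021-01-01
history, actual = prices[:cutoff], prices[cutoff:]

# Simulate only from data before the cutoff, then compare with what happened.
paths = simulate_paths(history, horizon=len(actual))
band = percentile_band(paths)
coverage = sum(lo <= a <= hi for (lo, _, hi), a in zip(band, actual)) / len(actual)
print(f"share of actual prices inside the 5%-95% band: {coverage:.2f}")
```

Plotting `band` together with `actual` would give the visual check described above; the coverage number is just a quick summary of the same comparison.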

|

Is there any commit where I can backtest (where end-date is passed) for crypto? If so I'll revert that commit in my local checkout |

|

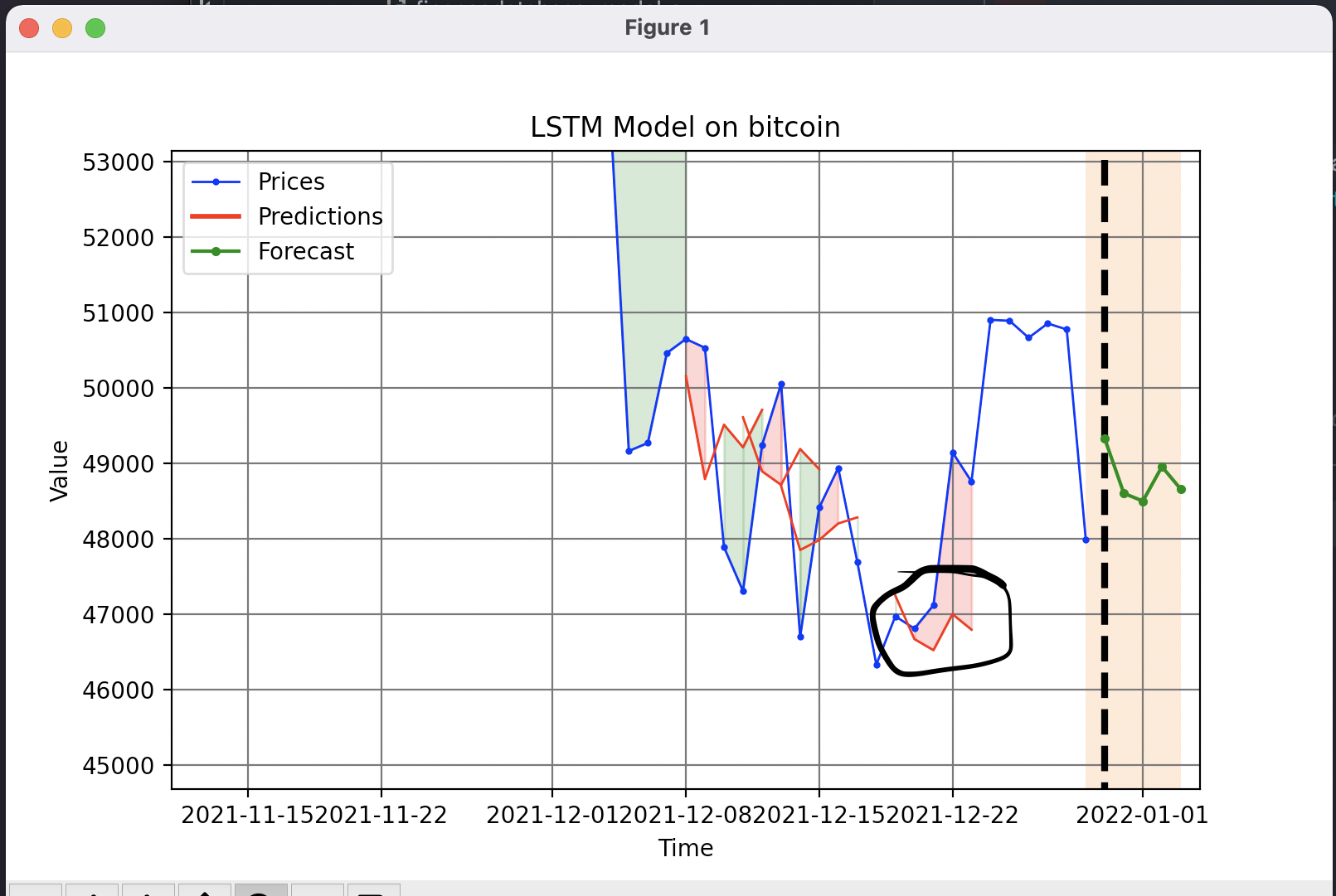

So any commit from before May 5th will have an older version of the prediction. You could load bitcoin through Yahoo Finance using … As for the backtesting/validation visualization: if you run the default LSTM (for example), you will get a plot like the one above. It is zoomed in, but there are regions of red/green areas along with red curves (like the one I circled). This is an in-sample validation, which can be interpreted as a "backtest" on that data. Here the model predicted a slight drop, but in reality the price jumped from 47k to 49k. The points used for validation are randomly selected so as not to add any bias, and the validation split can be changed using the -v flag. |

|

@jmaslek we can re-add the |

|

@martinb-bb does our PR fix this bug? |

|

Our PR does automatic backtesting but does not fix this bug. The PR assumes the user wants to test the whole series they load in. @colin99d we should probably add functionality to set start and end dates and have it slice the dataset before converting it to a timeseries object. |
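The slicing step suggested here could look roughly like the sketch below. It is a minimal illustration, not the project's code: the series is a plain list of `(date, value)` pairs filled with toy data, and `slice_by_date` is a hypothetical helper name.

```python
from datetime import date, timedelta


def slice_by_date(series, start=None, end=None):
    """Return the (date, value) pairs with start <= date <= end (both inclusive)."""
    return [
        (d, v) for d, v in series
        if (start is None or d >= start) and (end is None or d <= end)
    ]


# Toy daily series for 2021; the value is just the day index.
first = date(2021, 1, 1)
series = [(first + timedelta(days=i), float(i)) for i in range(365)]

# Everything up to the end date is what the model may see ...
train = slice_by_date(series, end=date(2021, 10, 10))
# ... and the following 30 days are held out to compare against the forecast.
holdout = slice_by_date(series, start=date(2021, 10, 11), end=date(2021, 11, 9))

print(len(train), train[-1][0], len(holdout))
```

Slicing before the conversion means the model never sees data past the end date, so the held-out days can be compared with the forecast afterwards.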

|

#1933 should resolve this issue with the new overhaul of forecasting models and backtesting capabilities. |

I installed it with conda using the provided preset, and I use the git upstream.

I tried backtesting with the neural networks (lstm, rnn, mlp) by adding --end-date (-e) 2021-10-10 -d 30, but all of them seem to ignore the flag (judging from the code, --end-date isn't being passed anywhere). This makes it impossible to backtest with the neural networks, since they just produce a forecast from the latest data. It works fine for all other prediction techniques. Monte Carlo doesn't seem to provide any end-date (backtest) flag either, which it definitely should as well (to compare the frequency of the MC results with the actual price).
