

Lipsync

VTube Studio can use your microphone to analyze your speech and calculate Live2D model mouth forms based on it.

You can select two lipsync types:

- Simple Lipsync:
  - Legacy option, Windows-only, based on Oculus VR Lipsync.
  - NOT RECOMMENDED, use Advanced Lipsync instead.
- Advanced Lipsync:
  - Based on uLipSync.
  - Fast and accurate. It can be calibrated using your own voice so it can accurately detect the A, I, U, E and O phonemes.
  - Available on all platforms (desktop and smartphone).

Note: "Simple Lipsync" will not be discussed here. If you're still using it, please consider moving on to "Advanced Lipsync". It supports the same parameters and more, is more performant and works on all platforms.

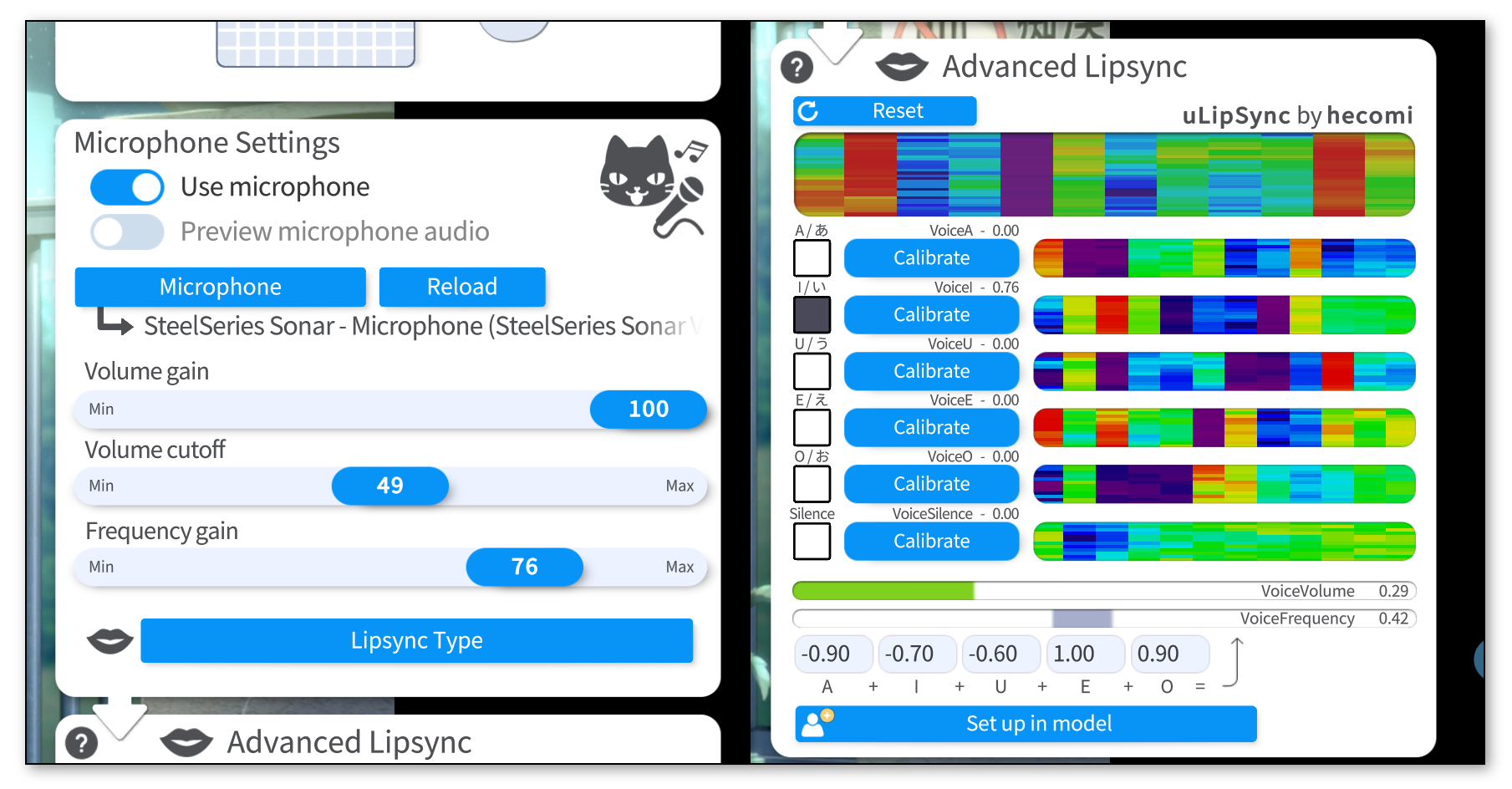

To use Advanced Lipsync, select it using the "Lipsync Type" button. Then select your microphone and make sure "Use microphone" is on.

There are three sliders on the main config card:

- Volume Gain: Boosts the volume from the microphone. Affects the `VoiceVolume` and `VoiceVolumePlusMouthOpen` parameters.
- Volume Cutoff: Noise gate. Eliminates low-volume noise. It's probably best to keep this low or at 0 and apply a noise gate before the audio is fed into VTube Studio instead.
- Frequency Gain: Boost value for `VoiceFrequency` and `VoiceFrequencyPlusMouthSmile`. These parameters are explained below.
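To make the roles of the Volume Gain and Volume Cutoff sliders more concrete, here is a minimal sketch of how a gain followed by a noise gate could act on a microphone level. This is not VTube Studio's actual code: the function name `apply_volume_sliders`, the assumption that the level is already normalized to 0..1, and the order "gain first, then cutoff" are all assumptions made for illustration.

```python
def apply_volume_sliders(raw_volume: float, volume_gain: float, volume_cutoff: float) -> float:
    """Hypothetical model of the "Volume Gain" and "Volume Cutoff" sliders.

    Assumptions (not taken from VTube Studio's source):
    - raw_volume is the microphone level already normalized to 0..1,
    - the gain is a plain multiplier,
    - the cutoff is applied after the gain and acts as a hard noise gate.
    """
    boosted = raw_volume * volume_gain
    # Noise gate: anything quieter than the cutoff is treated as silence.
    if boosted < volume_cutoff:
        return 0.0
    # Clamp so the resulting voice parameter stays in its 0..1 range.
    return min(boosted, 1.0)


print(apply_volume_sliders(0.2, 2.0, 0.05))   # quiet speech, boosted -> 0.4
print(apply_volume_sliders(0.02, 2.0, 0.05))  # background noise, gated -> 0.0
```

In this model, a high cutoff also swallows quiet syllables, which is one way to see why the manual suggests keeping the cutoff low and gating noise before the audio reaches VTube Studio.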

If your microphone lags behind, you can click the "Reload" button to restart the microphone. You can also set up a hotkey for that.

To calibrate the lipsync system, click each "Calibrate" button while saying the respective phoneme until the calibration is over. That way, the lipsync system will be calibrated to your voice. If you change your microphone or audio setup, you might want to redo the calibration.

Clicking "Reset" will reset the calibration to default values.

Make sure the calibration is good by saying all phonemes again and checking if the respective phoneme lights up on the UI.

The funny colorful visualizations shown next to the calibration buttons are related to the frequency spectrum recorded in your voice during calibration. If you'd like to learn more about the details, check the uLipSync repository.
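The general idea behind calibration can be sketched in a few lines. uLipSync works with MFCC (mel-frequency cepstral coefficient) features of the audio and compares the incoming features with the ones stored during calibration. The sketch below only illustrates that idea under simplifying assumptions: each calibration is stored as the average of the recorded feature vectors, the comparison uses cosine similarity, and the scores are normalized to sum to 1. The function names and the tiny 3-dimensional feature vectors are made up; uLipSync's real comparison and normalization may differ.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def calibrate(frames: list[list[float]]) -> list[float]:
    """Average the feature vectors recorded while one phoneme is held."""
    count = len(frames)
    return [sum(column) / count for column in zip(*frames)]


def detect(features: list[float], profiles: dict[str, list[float]]) -> dict[str, float]:
    """Score the current frame against every calibrated phoneme.

    Negative similarities are clamped to 0 and the scores are normalized
    so they sum to 1 (all zeros if nothing matches).
    """
    scores = {name: max(cosine_similarity(features, ref), 0.0) for name, ref in profiles.items()}
    total = sum(scores.values())
    if total == 0.0:
        return {name: 0.0 for name in profiles}
    return {name: score / total for name, score in scores.items()}


# Made-up 3-dimensional "features"; real MFCC vectors are longer.
profiles = {
    "A": calibrate([[1.0, 0.2, 0.0], [0.8, 0.0, 0.0]]),
    "I": calibrate([[0.0, 1.0, 0.1], [0.0, 0.8, -0.1]]),
    "U": calibrate([[0.0, 0.0, 1.0], [0.1, 0.0, 0.9]]),
}
print(detect([0.9, 0.1, 0.0], profiles))  # "A" gets by far the highest score
```

This also shows why recalibrating after a microphone change can help: the stored averages describe how your voice looked through the old setup, so a different setup shifts the incoming features away from them.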

The lipsync system outputs the following voice tracking parameters:

- `VoiceA`: Between 0 and 1.
  - How much the `A` phoneme is detected.
- `VoiceI`: Between 0 and 1.
  - How much the `I` phoneme is detected.
- `VoiceU`: Between 0 and 1.
  - How much the `U` phoneme is detected.
- `VoiceE`: Between 0 and 1.
  - How much the `E` phoneme is detected.
- `VoiceO`: Between 0 and 1.
  - How much the `O` phoneme is detected.
- `VoiceSilence`: Between 0 and 1.
  - 1 when "silence" is detected (based on your calibration) or when the volume is very low (near 0).
- `VoiceVolume` / `VoiceVolumePlusMouthOpen`: Between 0 and 1.
  - How loud the detected microphone volume is.
  - Map this to your `ParamMouthOpen` Live2D parameter.
- `VoiceFrequency` / `VoiceFrequencyPlusMouthSmile`: Between 0 and 1.
  - Calculated based on the detected phonemes. You can set up how the phoneme detection values are multiplied to generate this parameter.
  - Map this to your `ParamMouthForm` Live2D parameter.
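The manual says `VoiceFrequency` is computed from the phoneme detection values using multipliers you can configure. A minimal sketch, assuming the combination is a weighted sum of the five phoneme values that is then scaled by the Frequency Gain slider and clamped to 0..1, could look like this. The weights in `EXAMPLE_WEIGHTS` and the function name `voice_frequency` are hypothetical, chosen only to show the mechanism.

```python
# Hypothetical weights: how much each detected phoneme contributes to VoiceFrequency.
# The numbers are illustrative only; the manual just says these multipliers are configurable.
EXAMPLE_WEIGHTS = {"A": 0.5, "I": 1.0, "U": 0.0, "E": 0.8, "O": 0.1}


def voice_frequency(phonemes: dict[str, float], weights: dict[str, float],
                    frequency_gain: float = 1.0) -> float:
    """Weighted sum of phoneme detection values, boosted by the gain and clamped to 0..1."""
    value = sum(phonemes.get(name, 0.0) * weight for name, weight in weights.items())
    value *= frequency_gain
    return max(0.0, min(value, 1.0))


print(voice_frequency({"A": 0.5, "I": 0.5}, EXAMPLE_WEIGHTS))  # 0.75
print(voice_frequency({"U": 1.0}, EXAMPLE_WEIGHTS))            # 0.0
```

With weights like these, wide phonemes such as "I" push the mouth toward a smile while rounded ones such as "U" leave it neutral; a Frequency Gain above 1 simply reaches the clamp at 1.0 sooner.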

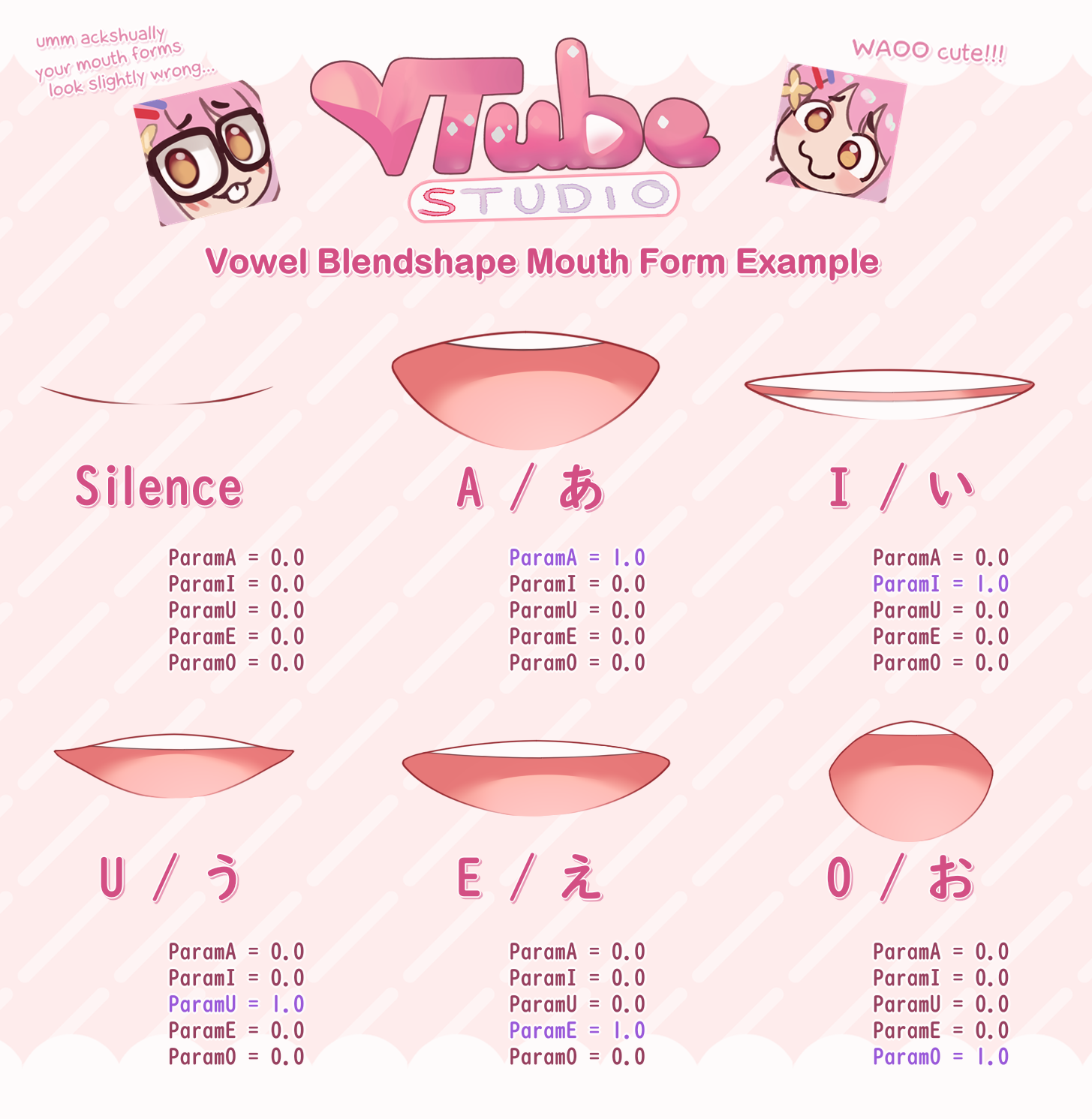

The `VoiceA`/`I`/`U`/`E`/`O` parameters should be mapped to blendshape Live2D parameters called `ParamA`, `ParamI`, `ParamU`, `ParamE` and `ParamO` that deform the mouth into the respective shape.

Here's a rough reference for how you could set up your blendshape mouth forms to get the most out of the advanced lipsync setup. Depending on what your model/artstyle looks like, your mouth forms may look different.
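The recommended mappings can be summarized as a small table from voice parameters to Live2D parameters. As an illustration only, the sketch below also assumes that each mapping converts the input value linearly from an input range to an output range and clamps at the ends; the names `VOICE_TO_LIVE2D`, `remap` and `apply_mappings` are hypothetical and not part of VTube Studio. It also assumes a model whose `ParamMouthForm` runs from -1 to 1.

```python
# Source voice parameter -> Live2D parameter, following the recommendations in this manual.
# Swap in the "...PlusMouthOpen" / "...PlusMouthSmile" variants if you use those instead.
VOICE_TO_LIVE2D = {
    "VoiceA": "ParamA",
    "VoiceI": "ParamI",
    "VoiceU": "ParamU",
    "VoiceE": "ParamE",
    "VoiceO": "ParamO",
    "VoiceVolume": "ParamMouthOpen",
    "VoiceFrequency": "ParamMouthForm",
}


def remap(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max], clamping at the ends."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(t, 1.0))
    return out_min + t * (out_max - out_min)


def apply_mappings(voice_values, ranges):
    """Compute Live2D values; ranges maps a Live2D name to (in_min, in_max, out_min, out_max)."""
    result = {}
    for source, target in VOICE_TO_LIVE2D.items():
        if source in voice_values:
            in_min, in_max, out_min, out_max = ranges.get(target, (0.0, 1.0, 0.0, 1.0))
            result[target] = remap(voice_values[source], in_min, in_max, out_min, out_max)
    return result


# Assumption: this model's ParamMouthForm runs from -1 (frown) to 1 (smile).
ranges = {"ParamMouthForm": (0.0, 1.0, -1.0, 1.0)}
print(apply_mappings({"VoiceA": 0.8, "VoiceFrequency": 0.5}, ranges))
# {'ParamA': 0.8, 'ParamMouthForm': 0.0}
```

Under these assumptions, a mid-range `VoiceFrequency` lands on a neutral mouth form, while full detection of a phoneme drives its blendshape parameter to the top of its output range.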

If you have any questions that this manual doesn't answer, please ask in the VTube Studio Discord!
